Most development post-mortems happen soon after the development phase of the project - preferably right before all of the developers depart for other projects (or companies) and leave someone else to maintain and support the software. But what would a post-mortem look like if you considered the entire software lifecycle instead of focusing exclusively on the development phase? You might find that some issues that seemed crucial on the path to release, such as the choice of development tool, team personalities, or even design decisions, turn out to be quite insignificant in the long run. And what seemed like afterthoughts during development might turn out to have had enormous consequences as time passed.

In late 2002 we started developing version 1.0 of a licensing system for the .NET 1.0 Framework. It began as an in-house project (our third take on licensing), but it quickly became apparent that it could be useful to .NET developers in general, and the scope changed to a commercial product. Seven years later and after five point releases, we are releasing version 2.0 of the product - so it is a perfect time to look back at the 1.x project successes and “challenges.”

Things that Went Well

Instrumentation

Back in 2002 I was a frequent speaker at various conferences on .NET topics. While other speakers tended to focus on the flashier aspects of Visual Studio, I always enjoyed “nuts and bolts” talks on less popular aspects of .NET. One of the talks I did was on the System.Diagnostics namespace and all of the new features .NET had for instrumenting applications. I don’t know if it was foresight or the fact that diagnostics happened to be on my mind, but I did decide to incorporate those features and add configurable instrumentation to the licensing software.

It may have been the single best design decision I’ve ever made. It turns out that as painful as deploying desktop applications can be, it’s nothing compared to the complexity of deploying server applications. Not only is there a nearly infinite number of ways to configure servers, but that number increases with every OS and service pack release. That we’ve never found a server we couldn’t get our software to run on is purely a result of that early design choice. The instrumentation was also invaluable in detecting some obscure bugs (more on that later).

Lesson learned: Instrumentation is not an afterthought. For version 2.0 we took advantage of diagnostics features introduced in later versions of the framework and built our own XML-based trace listeners. Not only do we capture more information, but we have better ways of retrieving it, displaying it, and logging it.

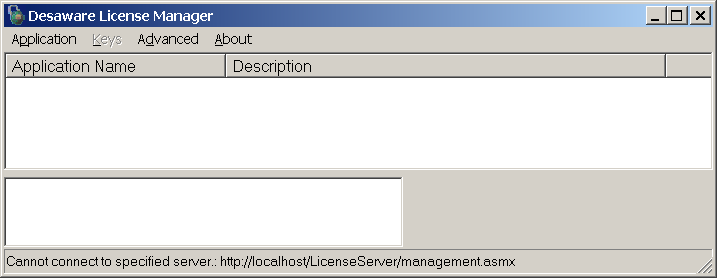

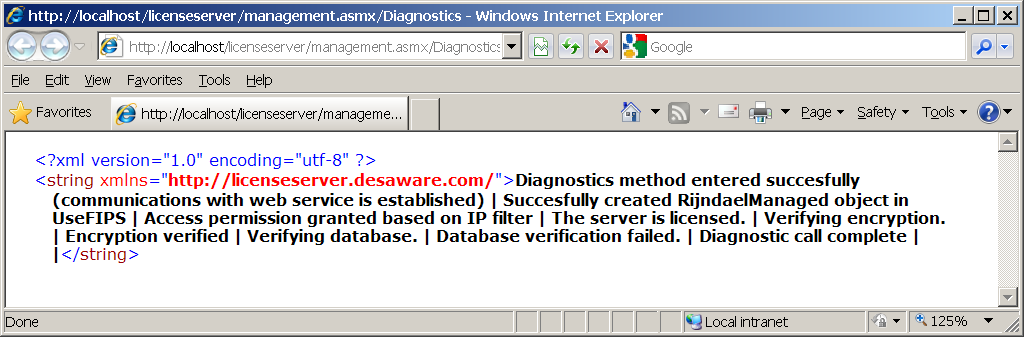

Figure 1 shows the original license manager application connecting to a server in which the database has intentionally been misconfigured. The diagnostics allow the application to know that an error occurred, but you have to go to the diagnostic web page as shown in Figure 2 to obtain specific information about the error. And even then, the information is limited.

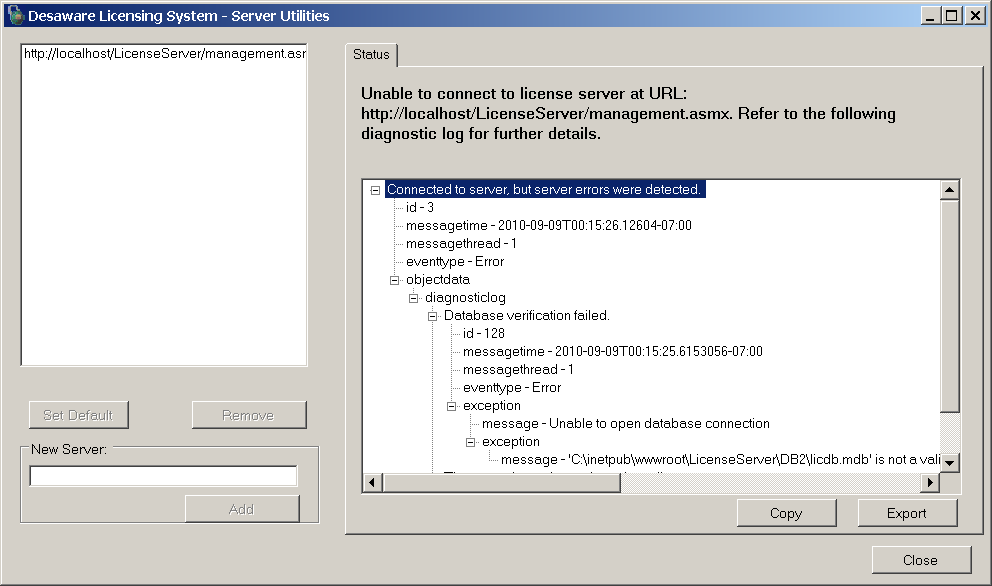

Figure 3 shows how the transition to XML diagnostics not only allows us to capture more information, but also supports a diagnostic user interface that lets you view the error and drill down into the specifics of the underlying exception that triggered it in the first place.
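
The XML trace listeners described above are built on .NET’s System.Diagnostics, and their code is not shown here. As a rough analogue only, here is a minimal Python sketch of the same idea: a logging handler that records each trace entry, including structured exception details, as an XML element that a viewer could later drill into. The class name `XmlTraceHandler`, the logger name, and all element and attribute names are hypothetical.

```python
import logging
import traceback
import xml.etree.ElementTree as ET


class XmlTraceHandler(logging.Handler):
    """Hypothetical handler that stores each log record as an XML <entry>.

    Loosely analogous to a custom .NET trace listener; not the product's code.
    """

    def __init__(self) -> None:
        super().__init__()
        self.root = ET.Element("trace")

    def emit(self, record: logging.LogRecord) -> None:
        entry = ET.SubElement(
            self.root, "entry",
            level=record.levelname, source=record.name, time=f"{record.created:.3f}",
        )
        ET.SubElement(entry, "message").text = record.getMessage()
        # Keep exception details structured so a UI can drill down into them.
        if record.exc_info and record.exc_info[1] is not None:
            exc = record.exc_info[1]
            exc_el = ET.SubElement(entry, "exception", type=type(exc).__name__)
            ET.SubElement(exc_el, "message").text = str(exc)
            ET.SubElement(exc_el, "stack").text = "".join(
                traceback.format_exception(*record.exc_info)
            )

    def to_xml(self) -> str:
        return ET.tostring(self.root, encoding="unicode")


# Usage: attach the handler and log a simulated database failure.
log = logging.getLogger("licensing.server")  # hypothetical logger name
log.setLevel(logging.DEBUG)
handler = XmlTraceHandler()
log.addHandler(handler)

try:
    raise ConnectionError("database 'Licenses' not found")
except ConnectionError:
    log.exception("Failed to open license database")

print(handler.to_xml())
```

Writing structured elements instead of flat text lines is what makes a drill-down view like the one in Figure 3 possible: the viewer can parse the exception type, message, and stack separately instead of scraping them out of a log line.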

Reliability and 64-bit Support

It’s odd that two of the greatest successes of the project were not due to any design choices, foresight or particular brilliance on our part, but an unexpected consequence of choosing to build on the .NET Framework.

Remember, in those days the standard platform for development was either C++ (with MFC or ATL) or VB6. It was the era of invalid pointer references, COM reference counting errors, memory leaks and buffer overflow-based attacks and crashes. Migrating software to 64 bits in C++ was non-trivial (and in VB6 impossible). The .NET Framework was very new and promised answers to these problems - but they were just promises.

Eight years later, I am still astonished by the degree to which .NET has delivered on those promises. The best illustration of this is the licensing server itself: it just works - no memory leaks, strange crashes, or side effects. And while it’s true that our biggest technical support issue is getting it configured on a particular server, once configured it just keeps working.

And when 64-bit operating systems became popular, we did some testing and found that both the client components and the server software would run natively as 64-bit code - all for free.

What’s in a System?

The original project scope was a licensing system designed to license software for a particular machine. As with any activation-based licensing system, we had to answer a question: when are two systems identical? We all experience this with Windows. You can upgrade or swap some hardware on a machine, but at some point Windows will decide that you’re on a new system and require you to reactivate your software.

The problem was: we didn’t know how to answer the question of whether two systems are identical. In fact, we still don’t know the “right” answer to this question. So we decided to cheat: we built in a very simple and not very tight algorithm for comparing systems, but we allowed customers to redefine both the “identifiers” that one can use to identify a system (things like the network card address, system name, etc.) and the algorithms used to compare two systems. We left it to customers to decide for themselves how many changes they would tolerate in their clients’ systems.

And they did. But that wasn’t the surprise. What we did not expect or completely realize at the time was that you could use these system identifiers to identify things that had nothing to do with an actual computer. Next thing you know, we had customers implementing per-user licensing, or domain-based licensing for web services, or even using USB network adapters to provide cheap dongle-based licensing.

Lesson learned: For version 2.0 we beefed up the built-in system with some new identifiers, a tighter default comparison algorithm, two other built-in algorithms, and a way to provide some customization to the comparison algorithms without writing code. We also made it possible for custom algorithms to call our built-in routines. I can’t wait to see what people will decide to license next.
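
The combination of pluggable identifiers and a pluggable comparison rule can be sketched in a few lines. The article does not describe the product’s actual algorithms, so this is only a minimal Python illustration, assuming a “system” is a dictionary of named identifier values and that a tolerance rule accepts a machine as the same system if no more than a configurable number of the recorded identifiers changed. All names here (`within_tolerance`, `exact_match`, the identifier keys) are hypothetical.

```python
from typing import Callable, Dict, Tuple

# A "system" is just a set of named identifier values. Nothing requires these
# to describe hardware: a user name or a web domain works just as well.
Identifiers = Dict[str, str]
Comparer = Callable[[Identifiers, Identifiers], bool]


def within_tolerance(max_changes: int) -> Comparer:
    """Hypothetical rule: two systems match if at most `max_changes` of the
    originally recorded identifiers differ or are missing."""

    def compare(original: Identifiers, current: Identifiers) -> bool:
        changed = sum(1 for key, value in original.items() if current.get(key) != value)
        return changed <= max_changes

    return compare


def exact_match(keys: Tuple[str, ...]) -> Comparer:
    """Hypothetical strict rule that only looks at the named identifiers."""

    def compare(original: Identifiers, current: Identifiers) -> bool:
        return all(original.get(k) == current.get(k) for k in keys)

    return compare


activated = {"mac": "00:1A:2B:3C:4D:5E", "machine_name": "BUILD01", "volume_serial": "A1B2-C3D4"}
new_nic = {**activated, "mac": "00:1A:2B:99:99:99"}
renamed_and_new_nic = {**new_nic, "machine_name": "BUILD02"}

lenient = within_tolerance(max_changes=1)
print(lenient(activated, new_nic))              # True: one change tolerated
print(lenient(activated, renamed_and_new_nic))  # False: two changes

# Per-user licensing: the only identifier that matters is the user name.
per_user = exact_match(("user",))
print(per_user({"user": "alice"}, {"user": "alice", "mac": "anything"}))  # True
```

Because the comparison is just a function over named values, nothing stops a customer from defining “identifiers” that have no connection to hardware at all, which is exactly the kind of use described above.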

Extensibility

In the original system, we thought it might be useful to give the server a way to call external code, so that you could build extensions that kept track of the licenses that were issued, and even embed some data into a license certificate when it was activated. This was almost an afterthought.

You see, we thought we knew how people wanted to license software (at least, we knew how we wanted to license software, and extrapolated from there). So we built a system that implemented those scenarios. As time went on we came to realize how naïve we were - it’s not that we missed important scenarios. Rather, it turned out that everyone seems to have their own approach to licensing - the possible scenarios were endless. For example: just recently I received an inquiry from a developer who wants to allow his software to be rented in seven-day increments until purchase.

The simple extensibility model we built in version 1.0 turned out to be incredibly important - it made the system far more adaptable than we had ever expected, and was critical to the success of the product.

Lesson learned: Version 2.0 has relatively few changes to the core licensing system, but a completely new extension model and plug-in API. In fact, most of the new features for 2.0 are implemented as plug-ins using the new extensibility model (eating our own dog food as it were). While we were at it, we made the license manager application extensible as well.

While it’s far too soon to say whether it will be able to handle every scenario our extraordinarily creative customers can come up with, at least we know the seven-day rental-until-purchase scenario can be implemented - in several different ways.
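
To make the plug-in idea concrete, here is a minimal Python sketch of a hook-based extension point and one possible way the seven-day rental-until-purchase scenario could plug into it. The article does not describe the version 2.0 plug-in API, so the `LicenseServer` class, the `License` fields, and the plug-in signature are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Callable, List


@dataclass
class License:
    # Hypothetical certificate fields; plug-ins may add entries to `data`.
    product: str
    purchased: bool = False
    data: dict = field(default_factory=dict)


Plugin = Callable[[License, date], None]


class LicenseServer:
    """Hypothetical core server: it knows nothing about rentals; plug-ins add policy."""

    def __init__(self) -> None:
        self.plugins: List[Plugin] = []

    def register(self, plugin: Plugin) -> None:
        self.plugins.append(plugin)

    def activate(self, lic: License, today: date) -> License:
        for plugin in self.plugins:  # each plug-in may embed data in the certificate
            plugin(lic, today)
        return lic


def seven_day_rental(lic: License, today: date) -> None:
    """Embed an expiration date seven days out unless the license was purchased."""
    if not lic.purchased:
        lic.data["expires"] = today + timedelta(days=7)


def is_valid(lic: License, today: date) -> bool:
    expires = lic.data.get("expires")
    return lic.purchased or expires is None or today < expires


server = LicenseServer()
server.register(seven_day_rental)
rented = server.activate(License("Widget Pro"), date(2010, 10, 1))
print(rented.data["expires"])               # 2010-10-08
print(is_valid(rented, date(2010, 10, 5)))  # True
print(is_valid(rented, date(2010, 10, 9)))  # False
```

Renting in seven-day increments then just means activating again each week. The core `activate` path never changes, which is the point of keeping new policy out of the core system.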

Web Service Architecture

Web services were all the rage back in 2002, and while I was slightly skeptical of the technology at the time, it turned out to be the obvious choice for communicating with the licensing server. In fact, the license manager application was little more than a simple shell application that made web service calls to the licensing server. Our thought was that by taking this approach we would make it easy for people to integrate licensing into their back-end systems. We guessed right. Many customers don’t even use our license manager application past the development stage. They choose to use standard SOAP calls to issue and track licenses from their CRM system, Salesforce, or e-commerce software.

Lesson learned: Yes, I know that old-fashioned SOAP web services are almost hidden in today’s Visual Studio behind the featured WCF capabilities, but the outside world speaks SOAP and REST. So for version 2.0 we didn’t change a thing aside from adding some new management functions to support the new features.
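
For readers who have not integrated with a SOAP service from outside .NET, here is a minimal Python sketch of building a SOAP 1.1 request envelope with only the standard library. The envelope namespace is the standard SOAP 1.1 one; the service namespace, the `IssueLicense` operation, and its parameters are hypothetical placeholders, not the product’s actual web methods.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # standard SOAP 1.1 namespace
SERVICE_NS = "http://example.com/licensing"              # hypothetical service namespace


def build_envelope(operation: str, params: dict) -> bytes:
    """Wrap an operation call and its parameters in a SOAP 1.1 envelope."""
    ET.register_namespace("soap", SOAP_ENV)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    call = ET.SubElement(body, f"{{{SERVICE_NS}}}{operation}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{SERVICE_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="utf-8", xml_declaration=True)


# e.g. from an e-commerce order hook (hypothetical operation and field names)
request = build_envelope(
    "IssueLicense",
    {"ProductId": "WidgetPro", "Quantity": 1, "CustomerEmail": "buyer@example.com"},
)
headers = {
    "Content-Type": "text/xml; charset=utf-8",
    "SOAPAction": f'"{SERVICE_NS}/IssueLicense"',  # hypothetical action URI
}
print(request.decode("utf-8"))
```

Sending it is an ordinary HTTP POST of `request` with those headers to the service URL. In practice a back-end system would usually generate a client from the service’s WSDL rather than build envelopes by hand.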

Challenges

KB948080

Bugs are annoying. Intermittent bugs are more annoying. Intermittent bugs that only happen on a very few systems with nothing apparent in common are incredibly annoying. And as for intermittent bugs that are deep within the .NET Framework… If you’ve ever been in that situation, you know there are no words to adequately describe the experience.

At some point in 2006, we started getting very sporadic reports of long delays when verifying signed XML certificates. We finally found one customer who could reproduce it on a system and was patient enough to work with us as we sent multiple custom versions of our software to test (where each version had additional instrumentation and experimental timing code to help us figure out what was taking so long).

Finding the problem was without doubt our single greatest challenge with the system. Ultimately it involved using Reflector to decompile a large portion of the signed XML verification and RSACryptoServiceProvider framework code, digging through that code, and working directly with one of the Microsoft developers involved with that portion of the framework. I suspect our work was part of what led to KB948080.

It turns out that there is a table of cryptographic object identifiers (OIDs) stored or cached within the framework that describe the various available cryptographic algorithms on a system and map from OID numeric values to the name of the algorithm. In fact, there are two such tables, a default table containing a list of default names and mappings, and a machine table that is normally empty. The VerifyData method used to check a digital signature performs a lookup query if the algorithm mapping is not already in the machine table - it doesn’t look first at the default table. In certain situations when an application is running on a local user account on a machine that is part of a domain with certain undefined DNS settings, those lookups will fail with long timeouts (after which the system will use the default value that was there all along).

The knowledge base article (which, of course, did not exist at the time) now recommends using the VerifyHash function instead of VerifyData to check a digital signature. VerifyHash uses the default mapping or one you specify, bypassing the need for a lookup query. Unfortunately, the Signed XML .NET libraries use VerifyData internally - and that usage can’t be overridden.

We really didn’t want to rewrite the entire .NET signed XML library. And asking users to modify their machine.config file to explicitly set the machine OID mapping table was not really an option. Ultimately, as a workaround we used reflection to access the internal tables within the .NET Framework and copy the default values into the machine table to prevent a network lookup from taking place.
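
The lookup-order problem and the workaround are easier to see in miniature. The Python sketch below models the behavior described above with two plain dictionaries: a lookup that consults the machine table and, on a miss, performs a (simulated) slow query before falling back to the default table. It is a model of the logic only. The real tables are internal to the .NET Framework and the real workaround used reflection. The names `DEFAULT_TABLE`, `MACHINE_TABLE`, `slow_directory_lookup`, `map_name_to_oid`, and `seed_machine_table` are hypothetical. The two OID values are the standard ones for SHA-1 and SHA-256.

```python
import time

# Default name -> OID mappings (standard OIDs for SHA-1 and SHA-256).
DEFAULT_TABLE = {"SHA1": "1.3.14.3.2.26", "SHA256": "2.16.840.1.101.3.4.2.1"}
# Machine table: normally empty.
MACHINE_TABLE: dict = {}

lookups = {"network": 0}  # counts simulated network queries


def slow_directory_lookup(name: str):
    """Stand-in for the network query that can stall on some domain setups."""
    lookups["network"] += 1
    time.sleep(0.01)  # the real delay could be a long timeout
    return None       # the query fails


def map_name_to_oid(name: str) -> str:
    """Model of the problematic order: machine table, then network, then defaults."""
    if name in MACHINE_TABLE:
        return MACHINE_TABLE[name]
    found = slow_directory_lookup(name)  # happens before the defaults are consulted
    return found if found is not None else DEFAULT_TABLE[name]


def seed_machine_table() -> None:
    """Workaround model: copy the defaults into the machine table up front."""
    for name, oid in DEFAULT_TABLE.items():
        MACHINE_TABLE.setdefault(name, oid)


before = map_name_to_oid("SHA1")  # goes to the (simulated) network first
seed_machine_table()
after = map_name_to_oid("SHA1")   # now served from the machine table
print(before == after, lookups["network"])  # True 1
```

The result is the same either way, which is what made the bug so maddening: the only symptom was the time spent on a lookup whose answer was already available locally.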

Lesson learned: Frameworks offer great benefits, but you become dependent on them as well. Having the patience and resources to look inside and create your own workarounds to problems can be critical - but it takes the ability and willingness to dig deep. No matter how good a framework is, you’re going to need people on call who have the ability to do advanced work.

Serialization

Backward compatibility is important. Maybe even sacred. One of the things we are proudest of is that our version 2.0 licensing server will activate certificates issued by our software going back to version 1.1, on frameworks from 1.1 through 4.0.

But if anyone out there is still using the version 1.0 licensing system on the 2.0 framework, and has ignored all of our warnings over the years to upgrade their server, they’re out of luck. One of the things we did when storing dates in our original license certificate format was to serialize them and encrypt them. But we used binary serialization at the time (it being easy to encrypt a byte array). That worked fine until the 2.0 framework was released and Microsoft changed the binary serialized format for dates. Oops. We updated the system to store the date using both binary and XML serialization, and were able to handle most situations, but those running the 1.0 code on the 2.0 framework who then tried to activate the resulting certificate on a 1.0 server were toast.

Lesson learned: XML serialization is nice. Binary serialization is not.
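
The same trap exists outside .NET: any serializer whose byte layout is an implementation detail of the runtime ties your stored data to that runtime. As a minimal Python illustration, `pickle` stands in for .NET binary serialization (newer pickle protocols cannot be read by older Python versions), and an ISO 8601 string inside an XML element stands in for XML serialization. The element names `license` and `expires` are hypothetical.

```python
import pickle
import xml.etree.ElementTree as ET
from datetime import datetime

expires = datetime(2010, 10, 8, 12, 0, 0)

# Opaque: the bytes depend on the pickle protocol and on how this runtime
# version chooses to represent a datetime internally.
opaque = pickle.dumps(expires, protocol=pickle.HIGHEST_PROTOCOL)

# Explicit: a documented text format that any version (or language) can parse.
cert = ET.Element("license")
ET.SubElement(cert, "expires").text = expires.isoformat()
portable = ET.tostring(cert, encoding="unicode")
print(portable)  # <license><expires>2010-10-08T12:00:00</expires></license>

# Encrypting still only needs a byte array: encode the text first.
payload = portable.encode("utf-8")

restored = datetime.fromisoformat(ET.fromstring(portable).findtext("expires"))
print(restored == expires)  # True
```

The text form costs a few more bytes, but its meaning is fixed by a published standard rather than by whatever the current runtime happens to do.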

Versioning Hell

You may have noticed a certain theme developing with regard to challenges. It’s easy enough (relatively speaking) to test your software against a particular operating system and framework version. It is boring but necessary to test against older operating systems and frameworks. But how do you test your software against operating systems and framework versions that don’t yet exist? Obviously you don’t. But when dealing with the entire software lifecycle, it quickly becomes clear that platform versioning issues are one of the greatest long-term challenges that any software developer faces.

My wildest experience with this was a scenario that came about during the .NET 2.0 release period (one which I wish I could still reproduce, if only for its entertainment value). We were seeing sporadic crashes within the .NET Framework itself. This made absolutely no sense.

It turned out to be somehow related to using our .NET 1.0 or 1.1 components from a .NET component in a .NET project that targeted .NET 2.0. Somehow the system ended up loading some assemblies from .NET 2.0 and some from .NET 1.x at the same time. The different assemblies, unsurprisingly, did not play well together, and the software would crash at seemingly random places within the framework.

Lesson learned: Bind your software to one version of the framework (using configuration files), and make sure all of the components you use target that version of the framework. Oh, and keep in mind that versioning with .NET can’t be evaluated just by version number: version 3.5 is just 2.0 with some new features (it runs on the same 2.0 runtime), while .NET 4.0 is a completely new version with its own runtime.
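
Because framework version numbers and runtime (CLR) versions do not line up one-to-one, a build or install check should compare runtimes rather than version labels. In .NET itself the binding is declared with the `<supportedRuntime>` element in the application configuration file. The Python sketch below only models the consistency check. The component names are hypothetical, and the mapping encodes the relationships stated above plus the fact that .NET 3.0, like 3.5, runs on the 2.0 runtime.

```python
from typing import Dict, List

# Framework version -> version of the CLR (runtime) it actually runs on.
CLR_FOR_FRAMEWORK = {
    "1.0": "1.0",
    "1.1": "1.1",
    "2.0": "2.0",
    "3.0": "2.0",  # 3.0 and 3.5 add libraries on top of the 2.0 runtime
    "3.5": "2.0",
    "4.0": "4.0",  # a new runtime
}


def runtimes_in_use(components: Dict[str, str]) -> Dict[str, List[str]]:
    """Group components (name -> target framework) by the runtime they need."""
    groups: Dict[str, List[str]] = {}
    for name, framework in sorted(components.items()):
        groups.setdefault(CLR_FOR_FRAMEWORK[framework], []).append(name)
    return groups


def check_single_runtime(components: Dict[str, str]) -> None:
    """Raise if the components would need more than one runtime."""
    groups = runtimes_in_use(components)
    if len(groups) > 1:
        detail = "; ".join(
            f"CLR {clr}: {', '.join(names)}" for clr, names in sorted(groups.items())
        )
        raise RuntimeError(f"components target multiple runtimes ({detail})")


# OK: 2.0 and 3.5 components share the 2.0 runtime.
check_single_runtime({"App.exe": "3.5", "Licensing.dll": "2.0"})

# The problem case: 1.1 components used from a project targeting 2.0.
try:
    check_single_runtime({"App.exe": "2.0", "Licensing.dll": "1.1"})
except RuntimeError as err:
    print(err)
```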

Server Installation

A “challenge” can perhaps best be thought of as a failure that has been overcome. A failure, then, would be a challenge that has not been overcome. Software installation (particularly on servers) is not so much a challenge or failure as it is a chronic condition - not quite a failure, but never a real success. Statistically, over 90% of our technical support has to do with configuring servers.

For those of you who have not had the pleasure of distributing server software, allow me to share a few of the highlights.

First, there are the normal compatibility issues that come with operating system evolution. From Windows 2000 to Windows 2008 R2, you have all of the changes we’ve seen on the desktop from Windows 2000 to Windows 7: UAC issues, registry virtualization, and general compatibility issues.

The license server is a standard ASP.NET web service application, but there is nothing standard about ASP.NET. Between IIS 5.1 and 7.5, there is a whole list of issues to deal with:

- The default identity for the web application varies from ASPNET to NETWORK SERVICE to a unique identity for each application pool. You need to use the right one when configuring security settings.

- You have to make sure the correct version of ASP.NET is selected for your application. Come to think of it, you have to make sure ASP.NET is enabled for the application in the first place. In fact, you have to make sure IIS is enabled - standard installations of current servers do not install the IIS role by default.

- The Windows installer likes to have IIS6 metabase compatibility enabled on IIS 7 and later (it isn’t by default).

- You can use WMI to configure IIS6, but that WMI support may not be enabled on IIS7, which has its own API in the Microsoft.Web.Administration namespace. We ended up writing duplicate administrative code for each technology.

- The licensing server is used to manage licenses, not just activate them. The activation interface has to be open to the public and to anonymous users for obvious reasons. For equally obvious reasons, the management interface has to be secure. The approach for securing individual files changed between IIS6 and 7: in IIS6 this task is handled in the metabase; in IIS7, in the web.config file. Also make sure authentication is enabled as an IIS feature in IIS7 - it isn’t by default.

- Security lockdown tools are nice (though nobody is quite sure of all of the things they do). One thing they often do is limit the trust settings on a site. The license server does need to run in full trust (all that encryption and serialization has problems in partial trust scenarios).

- And then there is SQL Server. The user identity for the web application (the one whose default value keeps changing) needs both login and schema permissions for the database: sufficient permissions to create and alter tables, because the license server takes care of that both during initial installation and during upgrades between versions.
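
Since the identity that needs database access keeps changing, it helps to compute it rather than hard-code it. Below is a minimal Python sketch that maps an IIS version to the default ASP.NET worker identity and generates T-SQL an installer might run. It assumes default IIS settings (an administrator can change the application pool identity) and a SQL Server instance on the same machine (against a remote server, these built-in identities authenticate as the computer account). The function names and the exact set of granted permissions are hypothetical, not the product’s installer logic.

```python
def quote_name(name: str) -> str:
    """Bracket-quote a SQL Server identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def default_app_identity(iis_version: str, machine: str, app_pool: str = "DefaultAppPool") -> str:
    """Default ASP.NET worker identity per IIS version (assumes defaults were not changed)."""
    if iis_version == "5.1":
        return f"{machine}\\ASPNET"        # local ASPNET account
    if iis_version in ("6.0", "7.0"):
        return "NT AUTHORITY\\NETWORK SERVICE"
    if iis_version == "7.5":
        return f"IIS APPPOOL\\{app_pool}"  # one virtual account per application pool
    raise ValueError(f"unknown IIS version: {iis_version}")


def grant_script(identity: str, database: str) -> str:
    """Hypothetical T-SQL giving the identity a login, a database user,
    and rights to create and alter tables in the dbo schema."""
    who, db = quote_name(identity), quote_name(database)
    return "\n".join([
        f"CREATE LOGIN {who} FROM WINDOWS;",
        f"USE {db};",
        f"CREATE USER {who} FOR LOGIN {who};",
        f"GRANT CREATE TABLE TO {who};",
        f"GRANT ALTER, SELECT, INSERT, UPDATE, DELETE ON SCHEMA::dbo TO {who};",
    ])


for version in ("5.1", "6.0", "7.0", "7.5"):
    print(version, default_app_identity(version, machine="LICSRV01", app_pool="LicenseServer"))

print(grant_script(default_app_identity("7.5", "LICSRV01", "LicenseServer"), "Licenses"))
```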

We’ve never actually calculated the number of possible permutations of operating system, IIS version, SQL Server version, and server role/feature settings. I’m afraid the number would be far too depressing. It is a race between Microsoft’s ability to increase the number of permutations and our ability to handle them - one in which we are destined to always come in second. Hopefully, a close second.

Lesson learned: you may not be a server IT guru when you start distributing server-based software, but you’ll need to become one quickly, whether you like it or not.

Balancing Features and Complexity Against Ease of Use

Everyone loves features. Or so they say. But every time you add features to software, you add complexity. In most cases you add to the learning curve. In the case of licensing software, you almost always add to the attack surface.

One of the greatest challenges we’ve faced over the years has been how to deal with feature requests - especially features that would arguably only benefit one or two customers. The cost of a feature always goes beyond the cost to develop it - there’s the cost to document it and support it, and the cost borne by other customers to learn it (if only enough to decide whether or not they need it).

When we started developing version 2.0, we had a long shopping list from customers, and we initially started implementing that list. That turned out to be a mistake - it quickly became apparent that by adding those features we were putting the stability of the entire system at risk - it was just getting too complex.

So we did something that, while painful, was I think necessary. We reverted to the version 1.6 codebase and threw out all of the changes. Instead of implementing those changes directly, we implemented an extensibility model and metadata management model that had a minimal impact on the core system (which was very stable). Then we went back to the shopping list and implemented most of the requested features using the extensibility model. One result of this approach is that users need to install only the features they need, and don’t need to learn or worry about the ones they don’t.

Lesson learned: If you’re halfway through a project and suddenly realize you’ve made a terrible mistake, don’t be afraid to face it. Sure, circumstances or management may require you to plod ahead and hope you can patch things together. But when you consider the complete lifecycle costs of an application, you may find that going back and doing things correctly is not only the “right” choice, but the most cost effective as well.

Conclusion

I honestly don’t remember the challenges we faced back in 2002 and 2003 when developing the original licensing system. I know we put a lot of time into design, and while I’m convinced that time was well spent, I’m humbled by the fact that many of our greatest successes turned out to be as much due to happenstance as foresight.

I do know that today when I read the debates about various software tools and techniques, and the latest technologies and language features, I don’t take them as seriously as I once did. In the long run, it’s the basics that count most. Design, architecture, testing, instrumentation and documentation are going to matter more than the particular language or tool you used, or the drama that took place within the development team at the time. And no matter how good a job you do, the world is going to keep changing and throwing unanticipated challenges at your software - so do the poor soul who will be supporting your software a favor, and keep things as clear, simple and well documented as you can. You never know - that poor soul might turn out to be you.

| Project Facts | |
|---|---|
| Release dates | Version 1.0: approximately May 2003. Point releases every year thereafter. Version 2.0: October 2010. |
| Operating systems supported over the years | Windows 2000 (workstation and server), XP, Vista (x32, x64), Windows 7 (x32, x64), Windows 2003 (x32, x64), Windows 2003 R2 (x32, x64), Windows 2008 (x32, x64), Windows 2008 R2 (x32, x64) |
| .NET Frameworks supported over the years | 1.0, 1.1, 2.0-3.5 and 4.0 |
| Current support | All of the above except Windows 2000 and .NET 1.0 are still supported. |
| UI support | Windows Forms, WPF and ASP.NET Web Forms |
| Web servers | IIS 5.1, 6, 7, 7.5 |
| Databases | Access/JET, SQL Server Express and full, MySQL via ODBC (not politically correct, but useful if you’re running the server on an inexpensive shared host) |
| Number of virtual machines currently used in testing | Approximately 20 |
| Core language | Visual Basic .NET. Samples provided in VB .NET and C# |
| Key tools | Visual Studio, MSDN, Google, Reflector, VMware and Virtual PC, Helpsmith |