The .NET class Thread defined in the System.Threading namespace represents a managed thread.

The Thread class provides various methods and properties to control the managed thread. Unfortunately, there is a significant potential for abusing these mechanisms, and most developers may not even realize they are doing anything wrong. This article describes the dos and don'ts of the Thread class, and then presents a wrapper class that simplifies starting a thread, correctly terminates a thread, and offers a more consistent class interface than that of the raw Thread class.

Managing Thread ID

You can get hold of the current thread your code runs on using the CurrentThread read-only static property of the Thread class:

```csharp
public sealed class Thread
{
    public static Thread CurrentThread { get; }
    //Other methods and properties
}
```

The CurrentThread property returns an instance of the Thread class. Each thread has a unique thread identification number called thread ID. You can access the thread ID via the GetHashCode() method of the Thread class:

```csharp
Thread currentThread = Thread.CurrentThread;
int threadID = currentThread.GetHashCode();
Trace.WriteLine("Thread ID is " + threadID);
```

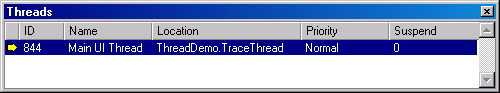

Thread.GetHashCode() is guaranteed to return a value that is unique process-wide. It is worth mentioning that the thread ID obtained by GetHashCode() is unrelated to the native thread ID allocated by the underlying operating system. You can verify that by opening the Threads debug window (under Debug|Windows) during a debug session and examining the value of the ID column (see Figure 1). The ID column reflects the physical thread ID. Keeping the two IDs separate allows .NET threads to map differently onto the native operating system support in the future. If you need to programmatically access the physical thread ID your code runs on, use the static method GetCurrentThreadId() of the AppDomain class:

```csharp
int physicalID = AppDomain.GetCurrentThreadId();
int hashedID = Thread.CurrentThread.GetHashCode();
Debug.Assert(physicalID != hashedID);
```
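The following sketch is not from the article. It assumes .NET Framework 2.0 or later, where Thread also exposes a ManagedThreadId property (later runtimes mark AppDomain.GetCurrentThreadId() obsolete and point to ManagedThreadId instead). ThreadIdDemo and GetIds() are hypothetical names. The sketch simply confirms that two threads report different managed IDs.

```csharp
using System.Threading;

// Hypothetical demo class: reads the managed thread ID on two different threads.
public static class ThreadIdDemo
{
    public static (int Caller, int Worker) GetIds()
    {
        int workerId = 0;
        Thread worker = new Thread(() =>
        {
            // This lambda is the thread method, so CurrentThread is the new thread.
            workerId = Thread.CurrentThread.ManagedThreadId;
        });
        worker.Start();
        worker.Join(); // after Join() returns, the worker's write to workerId is visible
        return (Thread.CurrentThread.ManagedThreadId, workerId);
    }
}
```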

Another useful property of the Thread class is the Name string property. Name allows you to assign a human-readable name to a thread:

```csharp
Thread currentThread = Thread.CurrentThread;
string threadName = "Main UI Thread";
currentThread.Name = threadName;
```

The thread name can only be assigned by the developer, and by default, a new .NET thread is nameless. Although naming a thread is optional, I highly recommend doing so, because it is an important productivity feature. Windows does not have the ability to assign a name to a thread. In the past, when developers debugged native Windows code, they had to record the new thread ID in every debugging session (using the Threads debug window). These IDs were not only confusing (especially when multiple threads were involved) but also changed in each new debugging session. The Threads debug window of Visual Studio .NET (see Figure 1) displays the value of the Name property, thus easing the task of tracing and debugging multithreaded applications. In addition, when a named thread terminates, VS .NET automatically traces the name of that thread to the Output window as part of the thread exit message.

The name is a good example of a managed-code property that .NET adds to native threads.
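A minimal sketch of naming a thread you create yourself, rather than the current one. Setting Name before calling Start() means the name is already in place when the thread method runs. ThreadNameDemo and the name string are hypothetical.

```csharp
using System.Threading;

// Hypothetical demo: name a worker thread, then read the name back on that thread.
public static class ThreadNameDemo
{
    public static string NameSeenByWorker()
    {
        string seen = null;
        Thread worker = new Thread(() =>
        {
            // Inside the thread method, CurrentThread is the named worker.
            seen = Thread.CurrentThread.Name;
        });
        worker.Name = "Worker Thread 1"; // assign the name before starting the thread
        worker.Start();
        worker.Join();
        return seen;
    }
}
```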

Creating Threads

To spawn a new thread, you need to create a new Thread object, and associate it with a method, called the thread method. The new Thread object executes the method on a separate thread. The thread terminates once the thread method returns. The thread method can either be a static or an instance method, a public or a private one, on your object or on another. The only requirement is that the thread method has this exact signature:

void <MethodName>();

That is, no parameters and a void return type. You associate a Thread object with the thread method using a dedicated delegate called ThreadStart, defined as:

public delegate void ThreadStart();

The Thread class constructor accepts as a single construction parameter an instance of the ThreadStart delegate, targeting the thread method:

```csharp
public sealed class Thread
{
    public Thread(ThreadStart start);
    //Other methods and properties
}
```

Once you create a new thread object, you must explicitly call its Start() method to have it actually execute the thread method. Listing 1 demonstrates creating and using a new thread.

Calling the Start() method is a non-blocking operation, meaning that control returns immediately to the client that started the thread, even though it may be some time later (depending on the operating system internal threading management) until the new thread actually starts. As a result, do not make any assumptions in your code (after calling Start()) that the thread is actually running.
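Listing 1 is not reproduced here. As a stand-in, here is a minimal sketch along the same lines, with hypothetical names (StartDemo, ThreadMethod()): wrap an instance method in a ThreadStart delegate, pass it to the Thread constructor, and call Start(). Because Start() does not block, the sketch calls Join() rather than assuming the thread has already run.

```csharp
using System.Threading;

// Hypothetical class; a sketch in the spirit of Listing 1, not the listing itself.
public class StartDemo
{
    private int m_Iterations;

    // The thread method: no parameters, void return type.
    public void ThreadMethod()
    {
        for (int i = 0; i < 3; i++)
        {
            m_Iterations++;
        }
    }

    public static int RunOnNewThread()
    {
        StartDemo obj = new StartDemo();
        ThreadStart threadStart = new ThreadStart(obj.ThreadMethod);
        Thread workerThread = new Thread(threadStart);
        workerThread.Start(); // non-blocking: ThreadMethod may not have run yet
        // Make no assumptions about the worker's progress here; wait for it explicitly.
        workerThread.Join();
        return obj.m_Iterations;
    }
}
```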


Although you should only have one thread method as a target for the ThreadStart delegate, you could associate it with multiple targets, in which case the new thread will execute all the methods in order, and the thread will terminate once the last target method returns. However, there is little practical use for such a setting. In general, you should only have one target thread method.
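To illustrate the multiple-target case, this sketch chains two lambdas into one ThreadStart delegate; the names are hypothetical. The thread invokes both targets in the order they were added and ends only after the second returns.

```csharp
using System.Collections.Generic;
using System.Threading;

// Hypothetical demo of a multicast ThreadStart delegate.
public static class MulticastDemo
{
    public static List<string> Run()
    {
        List<string> calls = new List<string>();
        ThreadStart threadStart = () => calls.Add("first");
        threadStart += () => calls.Add("second"); // second target in the invocation list
        Thread thread = new Thread(threadStart);
        thread.Start();
        thread.Join(); // the thread terminates once the last target returns
        return calls;
    }
}
```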

Designing Thread Methods

A thread method can do whatever you want it to, but typically, it will contain a loop of some sort. In each loop iteration, the thread performs a finite amount of work, and then checks some condition, letting it know whether to perform another iteration or to terminate:

```csharp
public void MyThreadMethod()
{
    while(<some condition>)
    {
        <Do some unit of work>
    }
}
```

The condition is usually the result of some external event telling the thread that its work is done. The condition can be as simple as checking the value of a flag, to waiting on a synchronization event. The condition is usually changed by another thread. As a result, changing and verifying the condition must be done in a thread-safe manner, using threading synchronization objects.
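One way to make the loop condition thread-safe, sketched under the assumption that a ManualResetEvent serves as the stop signal (LoopingWorker and RequestStop() are hypothetical names): the thread method polls the event with WaitOne(0), which returns immediately, and another thread calls Set() to end the loop.

```csharp
using System.Threading;

// Hypothetical worker whose loop condition is a ManualResetEvent set by another thread.
public class LoopingWorker
{
    private readonly ManualResetEvent m_StopEvent = new ManualResetEvent(false);

    // Written only by the worker thread; read it after Join().
    public int Iterations { get; private set; }

    public void ThreadMethod()
    {
        // WaitOne(0) tests the event without blocking; the event handles thread safety.
        while (!m_StopEvent.WaitOne(0))
        {
            Iterations++; // stand-in for "some unit of work"
        }
    }

    // Called on another thread; the loop ends before its next iteration.
    public void RequestStop()
    {
        m_StopEvent.Set();
    }
}
```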

Passing Thread Parameters

The constructor for the Thread class does not accept any parameters except the ThreadStart delegate, and the thread method itself takes no parameters. If you want to pass parameters to the thread method, you need to set properties on the class that provides the thread method, or have some other custom way, such as having the method retrieve its parameters from a known location planned in advance.
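A sketch of the property-based approach, with a hypothetical Summer class: the caller supplies the inputs through the constructor before starting the thread, and the parameterless thread method reads them from instance fields and stores its result in a property. (.NET 2.0 later added a ParameterizedThreadStart delegate, but this approach does not depend on it.)

```csharp
using System.Threading;

// Hypothetical class: parameters reach the thread method through instance fields.
public class Summer
{
    private readonly int m_From;
    private readonly int m_To;

    // Written by the thread method; read it after Join().
    public long Result { get; private set; }

    public Summer(int from, int to)
    {
        m_From = from;
        m_To = to;
    }

    // Parameterless thread method that reads its inputs from fields set before Start().
    public void ThreadMethod()
    {
        long sum = 0;
        for (int i = m_From; i <= m_To; i++)
        {
            sum += i;
        }
        Result = sum;
    }
}
```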

Blocking Threads

The Thread class provides a number of methods you can use to block the execution of a thread, similar in their effect to the native mechanisms available to Windows programmers. These include suspending a thread, putting a thread to sleep, and waiting for a thread to die.

Suspending and Resuming a Thread

The Thread class provides the Suspend() method, used to suspend the execution of a thread, and the Resume() method, used to resume a suspended thread:

```csharp
public sealed class Thread
{
    public void Resume();
    public void Suspend();
    //Other methods and properties
}
```

Anybody can call Suspend() on a Thread object, including objects running on that thread, and there is no harm in calling Suspend() on an already suspended thread. Obviously, only clients on other threads can resume a suspended thread. Suspend() is a non-blocking call, meaning that control returns immediately to the caller, and the thread is suspended later, usually at the next safe point. A safe point is a point in the code safe for garbage collection. When garbage collection takes place, .NET must suspend all running threads, so that it can compact the heap, move objects in memory, and patch client-side references. The JIT compiler identifies those points in the code that are safe for suspending the thread (such as returning from method calls or branching for another loop iteration). When Suspend() is called, the thread will be suspended once it reaches the next safe point.


The bottom line is that suspending a thread is not an instantaneous operation. The need to suspend and then resume a thread usually results from a need to synchronize the execution of that thread with other threads. Using Suspend() and Resume() for that purpose is not recommended, because there is no telling when it will take place. If you need to suspend the execution of a thread, and then later resume it, you should use the dedicated .NET synchronization objects. The synchronization objects provide a deterministic way of blocking a thread or signaling it to continue executing. In general, avoid explicitly suspending or resuming threads.
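As an illustration of replacing Suspend() and Resume(), here is a sketch with hypothetical names in which a ManualResetEvent acts as a gate. The worker blocks on the gate at a point of its own choosing (the top of each iteration), so a pause always takes effect at a known place in the code rather than at the next safe point.

```csharp
using System.Threading;

// Hypothetical pausable worker: a ManualResetEvent gate replaces Suspend()/Resume().
public class PausableWorker
{
    private readonly ManualResetEvent m_RunGate = new ManualResetEvent(true); // signaled = running
    private readonly ManualResetEvent m_Stop = new ManualResetEvent(false);

    public int Iterations { get; private set; }

    public void ThreadMethod()
    {
        while (!m_Stop.WaitOne(0))
        {
            m_RunGate.WaitOne(); // blocks here, and only here, while paused
            Iterations++;        // stand-in for one unit of work
        }
    }

    public void Pause() { m_RunGate.Reset(); }
    public void Resume() { m_RunGate.Set(); }

    // Request termination and open the gate so a paused worker can exit.
    public void Stop()
    {
        m_Stop.Set();
        m_RunGate.Set();
    }
}
```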

Putting a Thread to Sleep

The Thread class provides two overloaded versions of the static Sleep() method, used to put a thread to sleep for a specified timeout:

```csharp
public sealed class Thread
{
    public static void Sleep(int millisecondsTimeout);
    public static void Sleep(TimeSpan timeout);
    //Other methods and properties
}
```

Because Sleep() is a static method, you can only put your own thread to sleep:

Thread.Sleep(20);//Sleep for 20 milliseconds

Sleep() is a blocking call, meaning that control returns to the calling thread only after the sleep period has elapsed. Sleep() puts the thread in a special queue of threads waiting to be awakened by the operating system. Any thread that calls Sleep() willingly relinquishes the remainder of its allocated CPU time slot, even if the sleep timeout is less than the remainder of the time slot. Consequently, calling Sleep() with a timeout of zero is a way of forcing a thread context switch:

Thread.Sleep(0);//Forces a context switch

If no other thread (with this priority or higher) is ready to run, control will return to the thread.


You can also put a thread to sleep indefinitely, using the Infinite static constant of the Timeout class:

Thread.Sleep(Timeout.Infinite);

Of course, putting a thread to sleep indefinitely is an inefficient use of the system services, because it would be better to simply terminate the thread (by returning from the thread method). If you need to block a thread until some event takes place, use .NET synchronization objects. In fact, in general you should avoid putting a thread to sleep unless you specifically want the thread to act as a kind of timer. Traditionally, developers resorted to putting a thread to sleep to cope with race conditions, by explicitly taking some of the threads involved out of the race. A race condition is a situation where thread T1 needs another thread (T2) to complete a task or reach a certain state. The race condition occurs when T1 proceeds as if T2 is ready, while in fact it may not be. Sometimes T1 has its own processing to do, and that (in a poorly designed system) will usually keep it busy long enough to avoid the race condition. Occasionally, however, T1 will complete before T2 is ready, and an error will occur. Using Sleep() to resolve a race condition is inappropriate because it does not address the root cause of the race condition, which is usually a lack of proper synchronization between the participating threads in the first place. Putting threads to sleep is at best a makeshift solution, because the race condition could still manifest itself in different ways, and it is not likely to work when more threads get involved. Avoid putting a thread to sleep, and use .NET synchronization objects instead.
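A sketch of resolving the T1/T2 race without Sleep(), assuming a ManualResetEvent as the readiness signal (ReadySignalDemo and the value 42 are arbitrary illustrations): T2 sets the event only after reaching the required state, and T1 blocks on it instead of guessing how long to sleep.

```csharp
using System.Threading;

// Hypothetical demo: T1 (the calling thread) waits for T2 to signal readiness.
public static class ReadySignalDemo
{
    public static int Run()
    {
        int sharedValue = 0;
        ManualResetEvent ready = new ManualResetEvent(false);

        Thread t2 = new Thread(() =>
        {
            sharedValue = 42; // T2 reaches the state T1 depends on...
            ready.Set();      // ...and only then signals it
        });
        t2.Start();

        ready.WaitOne();      // T1 blocks deterministically until T2 is ready
        int observed = sharedValue;
        t2.Join();
        return observed;
    }
}
```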

Spinning While Waiting

The Thread class provides another sleep-like operation, called SpinWait():

public static void SpinWait(int iterations);

When a thread calls SpinWait(), the calling thread waits the number of iterations specified, and the thread is never added to the queue of waiting threads. As a result, the thread is effectively put to sleep without relinquishing the remainder of its CPU time slot. The .NET documentation does not define what an iteration is, but it is likely mapped to a predetermined number (probably just one) of NOP (no-operation) assembly instructions. Consequently, the following SpinWait() call will take a different amount of time to complete on machines with different CPU clock speeds:

```csharp
const int MILLION = 1000000; //SpinWait() takes an int, so a long constant would not compile
Thread.SpinWait(MILLION);
```

SpinWait() is not intended to replace Sleep(), but is rather made available as an advanced optimization technique. If you know that some resource your thread is waiting for will become available in the immediate future, it is potentially more efficient to spin and wait instead of using either Sleep() or a synchronization object, because those force a thread context switch, which is one of the most expensive operations performed by the operating system. Even in the esoteric cases for which SpinWait() was designed, using it is an educated guess at best. SpinWait() will gain you nothing if the resource is not available at the end of the call, or if the operating system preempts your thread because its time slot has elapsed or because another thread with a higher priority is ready to run. In general, I recommend that you always use deterministic programming (using synchronization objects in this case) and avoid such optimization techniques.

Joining a Thread

The Thread class provides the Join() method, which allows one thread to wait for another thread to terminate. Any client that has a reference to a Thread object can call Join(), and have the client thread blocked until the thread terminates. Note that you should always check before calling Join() that the thread you are trying to join to is not your current thread:

```csharp
void WaitForThreadToDie(Thread thread)
{
    //A thread that joins itself would block forever
    Debug.Assert(Thread.CurrentThread.GetHashCode() != thread.GetHashCode());
    thread.Join();
}
```

Join() will return regardless of the cause of death, whether natural (the thread returns from the thread method) or unnatural (the thread encountered an exception). Join() is useful when dealing with application shutdown: when an application starts its shutdown procedure, it typically signals all the worker threads to terminate and then waits for the threads to terminate. The standard way of doing that is by calling Join() on the worker threads.

Calling Join() is similar to waiting on a thread handle in the Win32 world, and it is likely the Join() method implementation does just that. Unfortunately, the Thread class does not provide a WaitHandle to be used in conjunction with multiple wait operations. This renders Join() inferior to raw Windows programming, because when waiting for multiple events to occur, you want to dispatch the wait request as one atomic operation to reduce the likelihood of deadlocks.
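One way to work around the missing WaitHandle, sketched here with hypothetical names, is to give each worker its own ManualResetEvent that the thread method sets in a finally block, then wait on all of them in a single WaitHandle.WaitAll() call. Note that WaitAll() accepts at most 64 handles and throws NotSupportedException for multiple handles on an STA thread.

```csharp
using System.Threading;

// Hypothetical helper: one "done" event per worker, waited on in a single call.
public static class MultiJoinDemo
{
    public static bool StartAndWaitAll(int count, int millisecondsTimeout)
    {
        ManualResetEvent[] done = new ManualResetEvent[count];
        for (int i = 0; i < count; i++)
        {
            ManualResetEvent handle = new ManualResetEvent(false);
            done[i] = handle;
            Thread worker = new Thread(() =>
            {
                try
                {
                    Thread.Sleep(10); // stand-in for real work
                }
                finally
                {
                    handle.Set(); // signaled however the thread method exits
                }
            });
            worker.Start();
        }

        // One wait covering every worker; false means the timeout elapsed first.
        bool allDone = WaitHandle.WaitAll(done, millisecondsTimeout);
        if (allDone)
        {
            // Safe to release the events only once no worker can still call Set().
            foreach (ManualResetEvent handle in done)
            {
                handle.Dispose();
            }
        }
        return allDone;
    }
}
```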


Besides the parameterless version, the Join() method has two overloaded versions that allow you to specify a waiting timeout:

```csharp
public sealed class Thread
{
    public void Join();
    public bool Join(int millisecondsTimeout);
    public bool Join(TimeSpan timeout);
    //Other methods and properties
}
```

When you specify a timeout, Join() will return when the timeout has expired or when the thread is terminated, whichever happens first. The bool return value will be set to false if the timeout has elapsed but the thread is still running, and to true if the thread is dead.
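A short sketch of the timeout overloads, with hypothetical names: the worker blocks on an event, so the first Join() times out and returns false; after the event is set, the second Join() returns true.

```csharp
using System;
using System.Threading;

// Hypothetical demo of Join() with a timeout.
public static class JoinTimeoutDemo
{
    public static (bool First, bool Second) Run()
    {
        ManualResetEvent release = new ManualResetEvent(false);
        Thread worker = new Thread(() => release.WaitOne()); // blocks until released
        worker.Start();

        bool first = worker.Join(TimeSpan.FromMilliseconds(100)); // false: still running
        release.Set();
        bool second = worker.Join(5000); // true: the thread has terminated
        return (first, second);
    }
}
```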

Interrupting a Waiting Thread

You can rudely awaken a sleeping or waiting thread by calling the Interrupt() method of the Thread class:

public void Interrupt();


Calling Interrupt() unblocks a sleeping thread (or a waiting thread, such as a thread that called Join() on another thread) and throws an exception of type ThreadInterruptedException in the unblocked thread. If the code the thread executes does not catch that exception, the thread is terminated by the runtime. If Thread.Interrupt() is called while the thread is not sleeping (or waiting), then the next time the thread tries to go to sleep (or wait), .NET will immediately throw ThreadInterruptedException in that thread. Again, you should avoid relying on drastic solutions such as throwing exceptions to unblock another thread. Use .NET synchronization objects instead, to gain the benefits of structured and deterministic code flow. In addition, calling Interrupt() does not interrupt a thread that is executing unmanaged code via interop. Nor does it interrupt a thread that is in the middle of a call to SpinWait(), because that thread is actually not waiting at all (as far as the operating system is concerned).
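For completeness, a sketch of how Interrupt() surfaces in the target thread (InterruptDemo is a hypothetical name). Even if Interrupt() runs before the worker reaches Sleep(), the interrupt stays pending and the exception is thrown as soon as the worker blocks, so the result does not depend on timing.

```csharp
using System.Threading;

// Hypothetical demo: interrupting a thread that sleeps indefinitely.
public static class InterruptDemo
{
    public static bool Run()
    {
        bool interrupted = false;
        Thread sleeper = new Thread(() =>
        {
            try
            {
                Thread.Sleep(Timeout.Infinite);
            }
            catch (ThreadInterruptedException)
            {
                interrupted = true; // Interrupt() arrives here as an exception
            }
        });
        sleeper.Start();
        sleeper.Interrupt(); // pending until the thread blocks, if it has not yet
        sleeper.Join();
        return interrupted;
    }
}
```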

Aborting a Thread

The Thread class provides an Abort() method, intended to forcefully terminate a .NET thread. Calling Abort() throws an exception of type ThreadAbortException in the thread being aborted. ThreadAbortException is a special kind of exception: even if the thread method uses exception handling to catch exceptions, such as:

```csharp
public void MyThreadMethod()
{
    try
    {
        while(<some condition>)
        {
            <Do some work>
        }
    }
    catch
    {
        //Handle exceptions here
    }
}
```

After the catch block executes, .NET re-throws the ThreadAbortException to terminate the thread. This is done so that non-structured attempts to ignore the abort by jumping back to the beginning of the thread method simply will not work:

```csharp
//Code that does not work when ThreadAbortException is thrown.
public void MyThreadMethod()
{
Resurrection:
    try
    {
        while(<some condition>)
        {
            <Do some work>
        }
    }
    catch
    {
        goto Resurrection;
    }
}
```

If Abort() is called before the thread is started, .NET will never start the thread once Thread.Start() is called. If Thread.Abort() is called while the thread is blocked (by calling Sleep() or Join(), or while waiting on one of the .NET synchronization objects), .NET unblocks the thread and throws ThreadAbortException in it. However, you cannot call Abort() on a suspended thread. Doing so throws an exception of type ThreadStateException on the calling side, with the error message "Thread is suspended; attempting to abort." In addition, .NET will terminate the suspended thread without letting it handle the exception.

The Thread class has an interesting counter-abort method, the static ResetAbort() method:

public static void ResetAbort();


Calling Thread.ResetAbort() in a catch block prevents .NET from re-throwing ThreadAbortException at the end of the catch block:

```csharp
catch(ThreadAbortException exception)
{
    Trace.WriteLine("Refusing to die");
    Thread.ResetAbort();
    //Do more processing or even go to somewhere
}
```

Note that ResetAbort() demands the ControlThread security permission.

Terminating a thread by calling Abort() is not recommended for a number of reasons. The first is that it forces the thread to perform an ungraceful exit. Often the thread needs to release resources it holds and perform some sort of cleanup before terminating. You can of course handle exceptions and put the cleanup code in a finally block, but you typically want to handle unexpected errors that way, not use it as the standard way of terminating your thread. Never use exceptions to control the normal flow of your application; it is akin to non-structured programming using goto.

Second, nothing prevents the thread from abusing .NET by performing as many operations as it likes in the catch block, jumping to a label, or calling ResetAbort(). If you want to terminate a thread, do so in a structured manner using the .NET synchronization objects: signal the thread method to exit using a member variable or event.

Calling Thread.Abort() has another liability: if the thread makes an interop call (using COM Interop or P/Invoke), the interop call may take a while to complete. If Thread.Abort() is called during the interop call, .NET will not abort the thread; it lets the thread complete the interop call and aborts it only when the call returns. This is yet another reason why Thread.Abort() is not guaranteed to succeed, or to succeed immediately.

Thread States

.NET manages a state machine for each thread and moves the thread between states. The ThreadState enum defines the set of states a .NET managed thread can be in:

```csharp
[Flags]
public enum ThreadState
{
    Aborted          = 0x00000100,
    AbortRequested   = 0x00000080,
    Background       = 0x00000004,
    Running          = 0x00000000,
    Stopped          = 0x00000010,
    StopRequested    = 0x00000001,
    Suspended        = 0x00000040,
    SuspendRequested = 0x00000002,
    Unstarted        = 0x00000008,
    WaitSleepJoin    = 0x00000020
}
```

For example, if a thread is in the middle of a Sleep(), Join(), or a wait call on one of the synchronization objects, then the thread will be in the ThreadState.WaitSleepJoin state. .NET will throw an exception of type ThreadStateException when it tries to move the thread to an inconsistent state, such as calling Start() on a thread in the ThreadState.Running state, or trying to abort a suspended thread (ThreadState.Suspended). The Thread class has a public read-only property called ThreadState you can access to find out the exact state of the thread:

public ThreadState ThreadState { get; }

The ThreadState enum values can be bit-masked together, so testing for a given state is done typically as follows:

```csharp
Thread workerThread;
//Some code to initialize workerThread, then:
ThreadState state = workerThread.ThreadState;
if((state & ThreadState.Suspended) == ThreadState.Suspended)
{
    workerThread.Resume();
}
```

However, I don't recommend ever designing your application so that you rely on the information provided by the ThreadState property. You should design so that your code does not depend on the thread being in a particular state. In addition, by the time you retrieve the thread's state and decide to act upon it, the state may have changed. If your thread transitions between logical states (specific to your application) such as beginning or finishing tasks, use .NET synchronization objects to synchronize transitioning between those states. The only exception to this is the knowledge that the thread is alive, required sometimes for diagnostics or control flow. For that reason, the Thread class has the Boolean read-only public property IsAlive that you should use instead of the ThreadState property:

public bool IsAlive { get; }

For example, there is little point in calling Join() on a thread if the thread is not alive:

```csharp
Thread workerThread;
//Some code to start workerThread, then:
if(workerThread.IsAlive)
{
    workerThread.Join();
}
Trace.WriteLine("Thread is dead");
```

Therefore, in general, you should avoid accessing Thread.ThreadState.

Foreground and Background Threads

.NET defines two kinds of managed threads: background threads and foreground threads. The two thread types are exactly the same except .NET will keep the process alive as long as there is at least one foreground thread running. Put differently, a background thread will not keep the .NET process alive once all foreground threads have exited.

New threads are created as foreground by default. To mark a thread as a background thread, you need to set the Thread object's IsBackground property to true:

public bool IsBackground { get; set; }

When the last foreground thread in the application terminates, .NET shuts down the application. The .NET runtime tries to terminate all the remaining background threads by throwing ThreadAbortException in them. Background threads are a poor man's solution for application shutdown. Instead of designing the application correctly to keep track of what threads it created, which threads are still running and need to be terminated when the application shuts down, a quick and dirty solution is to let .NET try to terminate all those background threads. Normally, you should not count on .NET to kill your background threads for you. You should have a deterministic, structured way of shutting down your application, by doing your own bookkeeping and explicitly controlling the life cycle of your threads and taking steps to shut down all threads on exit.
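A sketch of the bookkeeping approach, with hypothetical names: the application records every worker it creates, signals them all through one shared ManualResetEvent, and joins each one. The workers stay foreground threads, so nothing relies on the runtime killing them.

```csharp
using System.Collections.Generic;
using System.Threading;

// Hypothetical registry that owns the life cycle of every worker thread it starts.
public class WorkerRegistry
{
    private readonly ManualResetEvent m_Shutdown = new ManualResetEvent(false);
    private readonly List<Thread> m_Workers = new List<Thread>();

    public void StartWorker(string name)
    {
        Thread worker = new Thread(WorkLoop);
        worker.Name = name;
        lock (m_Workers) { m_Workers.Add(worker); }
        worker.Start();
    }

    private void WorkLoop()
    {
        // Waits up to 50 ms between units of work; returns true as soon as shutdown is signaled.
        while (!m_Shutdown.WaitOne(50))
        {
            // periodic unit of work would go here
        }
    }

    // Signals every worker, then returns only after each one has exited.
    public void Shutdown()
    {
        m_Shutdown.Set();
        Thread[] workers;
        lock (m_Workers) { workers = m_Workers.ToArray(); }
        foreach (Thread worker in workers)
        {
            worker.Join();
        }
    }

    public bool AnyAlive()
    {
        lock (m_Workers)
        {
            foreach (Thread worker in m_Workers)
            {
                if (worker.IsAlive) return true;
            }
        }
        return false;
    }
}
```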

Thread Priority and Scheduling

Each thread is allocated a fixed time slot to run on the CPU, and it is assigned a priority. In addition, a thread is either ready to run, or it is waiting for some event to occur, such as a synchronization object being signaled or a sleep timeout to elapse. The underlying operating system schedules for execution those threads that are ready to run based on the thread's priority. Thread scheduling is preemptive, meaning that the thread with the highest priority always gets to run. If a thread T1 with priority P1 is running, and suddenly thread T2 with priority P2 is ready to run, and P2 is greater than P1, the operating system will preempt (pause) T1 and allow T2 to run. If multiple threads with the highest priority are ready to run, the operating system will let each run its CPU time slot and then preempt it in favor of another thread with the same priority, in a round-robin fashion.

The Thread class provides the Priority property of the enum type ThreadPriority, which allows you to retrieve or set the thread priority:

public ThreadPriority Priority { get; set; }

The enum ThreadPriority provides five priority levels:

```csharp
public enum ThreadPriority
{
    Lowest,
    BelowNormal,
    Normal,
    AboveNormal,
    Highest
}
```

New .NET threads are created by default with a priority of ThreadPriority.Normal. Developers often abuse thread priority settings as a means to control the flow of a multithreaded application to work around race conditions. Tinkering with thread priorities generally is not an appropriate solution and can lead to some adverse side effects and other race conditions. For example, imagine two threads that are involved in a race condition. By increasing one thread's priority, in the hope that it will run at the expense of the other and win the race, you often just decrease the probability of the race condition, because the thread with the higher priority can still be switched out or perform blocking operations. In addition, does it make sense to always run that thread at a higher priority? That could paralyze other aspects of your application. You could, of course, increase the priority only temporarily, but then you would address just that particular occurrence of the race condition, and remain exposed to future occurrences.

You may be tempted to always keep that thread at a high priority, and increase the priority of other affected threads. Often, increasing one thread's priority causes an inflation of increased thread priorities all around, because the normal balance and time-sharing governed by the operating system is disturbed. The result can be a set of threads, all with the highest priority, still involved in race conditions. The major adverse effect now is that .NET suffers, because many of its internal threads (such as threads used to manage memory, execute remote calls, and so on) are suddenly competing with your threads. In addition, preemptive operating systems (like Windows) will dynamically change threads' priorities to resolve priority inversion situations.

A priority inversion occurs when threads with lower priority run instead of threads with a higher priority. Because .NET threads are currently mapped to the underlying Windows threads, these dynamic changes propagate to the managed threads as well. Consider for example three managed threads T1, T2, T3, with respective priorities of ThreadPriority.Lowest, ThreadPriority.Normal, and ThreadPriority.Highest. T3 is waiting for a resource held by T1. T1 is ready to run to release the resource, except that T2 is now running, always preventing T1 from executing. As a result, T2 prevents T3 from running, and priority inversion takes place, because T3 has a priority greater than that of T2.

To cope with priority inversions, the operating system not only keeps track of thread priorities, but also maintains a scoreboard showing who got to run and how often. If a thread is denied the CPU for a long time (a few seconds), the operating system dynamically boosts that thread's priority to a higher priority, letting it run for a couple of time slots with the new priority, and then sets the priority back to its original value. In the previous scenario, this allows T1 to run, release the resource T3 is waiting for, and then regain its original priority. T3 will be ready to run (because the resource is now available) and will preempt T2. The point of this example and the other arguments is that you should avoid controlling the application flow by setting thread priorities. Use .NET synchronization objects to control and coordinate the flow of your application and to resolve race conditions. Set thread priorities to values other than normal only when the semantics of the application require it. For example, if you develop a screen saver, its threads should run at priority ThreadPriority.Lowest so that other background operations such as compilation, network access, or number crunching can take place and not be affected by the screen saver.

The WorkerThread Wrapper Class

The source code accompanying this article contains the WorkerThread class, which is a high-level wrapper class around the basic .NET Thread class. WorkerThread's public methods and properties are:

```csharp
public class WorkerThread : IDisposable
{
    public WorkerThread();
    public WorkerThread(bool autoStart);
    public WaitHandle Handle{get;}
    public void Start();
    public void Dispose();
    public void Kill();
    public void Join();
    public bool Join(int millisecondsTimeout);
    public bool Join(TimeSpan timeout);
    public string Name{get;set;}
    public bool IsAlive{get;}
}
```

WorkerThread provides easy thread creation and other features, including a Kill() method for terminating the thread instead of using Abort(). The potentially dangerous methods of the Thread class are not present in the interface of WorkerThread, and the good ones are maintained. Listing 2 shows the implementation of WorkerThread. Because the Thread class is sealed, I had to use containment rather than derivation when defining WorkerThread. WorkerThread has the m_ThreadObj member variable of type Thread, representing the underlying wrapped thread. You can access the underlying thread via the Thread property of WorkerThread, if you still want direct thread manipulation.

Creating a New Thread

WorkerThread provides one-phase thread creation, because it can encapsulate the use of the ThreadStart delegate. Its constructor accepts a bool value called autoStart. If autoStart is true, the constructor will create a new thread and start it:

```csharp
WorkerThread workerThread;
workerThread = new WorkerThread(true);
```

If autoStart is false, or when using the default constructor, you need to call the WorkerThread.Start() method, just like when using the raw Thread class:

```csharp
WorkerThread workerThread = new WorkerThread();
workerThread.Start();
```

The thread method for WorkerThread is the private Run() method. In Listing 2, all Run() does is trace the value of a counter to the Output window. WorkerThread provides the Name property for naming the underlying thread.

Joining a Thread and the Thread Handle

WorkerThread provides a Join() method, which asserts that Join() is called on a thread other than the underlying thread, to avoid a deadlock. Join() also verifies that the thread is alive before calling Join() on the wrapped thread. I also wanted to expose a property of type WaitHandle, to be signaled when the thread terminates. For that, WorkerThread has a member variable of type ManualResetEvent, called m_ThreadHandle. The constructors instantiate m_ThreadHandle in the non-signaled state. When the Run() method returns, it signals m_ThreadHandle. To ensure that the handle is signaled regardless of how Run() exits, the signaling is done in a finally block.

WorkerThread provides access to m_ThreadHandle via the Handle property. WorkerThread also provides the Boolean property IsAlive, which not only calls the underlying thread's IsAlive property, but also asserts that the m_ThreadHandle state is consistent: you can test whether a ManualResetEvent object is signaled by waiting on it with a timeout of zero and checking the value returned from the WaitOne() method.

Killing the Thread

One of the most common challenges developers face is the task of killing their worker threads, usually upon application shutdown. As mentioned previously, you should avoid calling Thread.Abort() to terminate your thread. Instead, in each iteration of the thread method, you should check a flag signaling whether to do another iteration or return from the method. As shown in Listing 2, the thread method Run() traces to the Output window the value of a counter in a loop:

```csharp
int i = 0;
while(EndLoop == false)
{
    Trace.WriteLine("Thread is alive, Counter is " + i);
    i++;
}
```

Before every loop iteration, Run() checks the Boolean property EndLoop. If EndLoop is false, Run() performs another iteration. WorkerThread provides the Kill() method, which sets EndLoop to true, causing Run() to return and the thread to terminate. EndLoop actually gets and sets the value of the Boolean m_EndLoop member variable. Because Kill() will be called on a client thread, you must provide for thread-safe access to m_EndLoop. You can use any of the manual locks: you can lock the whole WorkerThread object using a Monitor, or you can use a ReaderWriterLock, although a ReaderWriterLock is excessive for a property that will only be written once. I chose to use a Mutex:

```csharp
bool EndLoop
{
    set
    {
        m_EndLoopMutex.WaitOne();
        m_EndLoop = value;
        m_EndLoopMutex.ReleaseMutex();
    }
    get
    {
        bool result = false;
        m_EndLoopMutex.WaitOne();
        result = m_EndLoop;
        m_EndLoopMutex.ReleaseMutex();
        return result;
    }
}
```

Kill() should return only when the worker thread is dead. To that end, Kill() calls Join().

However, because Kill() is called on the client thread, the WorkerThread object must store as a member variable a Thread object referring to the worker thread. Fortunately, there is already such a member: the m_ThreadObj member variable. You can only store the thread value in the thread method, not in the constructor, which executes on the creating client's thread. This is exactly what Run() does in this line:

m_ThreadObj = Thread.CurrentThread;

Note that calling Kill() multiple times is harmless. Also note that Kill() does the cleanup of closing the mutex. Finally, what if the client never calls Kill()? To answer that, the WorkerThread class implements IDisposable and a destructor, both calling Kill():

```csharp
//A destructor (finalizer) takes no access modifier and no return type
~WorkerThread()
{
    Kill();
}

public void Dispose()
{
    Kill();
}
```

It is important to understand that Kill() is not the same as Dispose(). Kill() handles execution flow, such as application shutdown or timely termination of threads, whereas Dispose() caters to memory and resource management, disposing of other resources the WorkerThread class might hold. The only reason Dispose() calls Kill() is as a contingency, in case the client developer forgets to do it.
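Listing 2 is not reproduced here. The following is a condensed sketch consistent with the description above, not the listing itself. It differs deliberately in two ways: it guards m_EndLoop with a lock (Monitor), one of the alternatives mentioned earlier, instead of a Mutex, and it omits the destructor, because a finalizer that blocks in Join() can stall the finalizer thread. The Iterations property is a hypothetical addition so callers can observe the work.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Condensed sketch of a WorkerThread-style wrapper; NOT the article's Listing 2.
// Assumption: a lock (Monitor) guards m_EndLoop instead of the article's Mutex.
public class WorkerThread : IDisposable
{
    private readonly Thread m_ThreadObj;
    private readonly ManualResetEvent m_ThreadHandle = new ManualResetEvent(false);
    private readonly object m_EndLoopLock = new object();
    private bool m_EndLoop;

    public WorkerThread() : this(false) { }

    public WorkerThread(bool autoStart)
    {
        m_ThreadObj = new Thread(Run);
        if (autoStart)
        {
            Start();
        }
    }

    public WaitHandle Handle => m_ThreadHandle;
    public bool IsAlive => m_ThreadObj.IsAlive;
    public string Name
    {
        get => m_ThreadObj.Name;
        set => m_ThreadObj.Name = value;
    }

    // Hypothetical counter, only so callers can observe that Run() did work.
    public int Iterations { get; private set; }

    public void Start() => m_ThreadObj.Start();

    private bool EndLoop
    {
        get { lock (m_EndLoopLock) { return m_EndLoop; } }
        set { lock (m_EndLoopLock) { m_EndLoop = value; } }
    }

    // The thread method.
    private void Run()
    {
        try
        {
            while (!EndLoop)
            {
                Iterations++; // stand-in for one unit of work
            }
        }
        finally
        {
            m_ThreadHandle.Set(); // signaled however Run() exits
        }
    }

    public void Join()
    {
        Debug.Assert(Thread.CurrentThread != m_ThreadObj, "A thread cannot join itself");
        if (m_ThreadObj.IsAlive)
        {
            m_ThreadObj.Join();
        }
    }

    public bool Join(int millisecondsTimeout) => Join(TimeSpan.FromMilliseconds(millisecondsTimeout));

    public bool Join(TimeSpan timeout)
    {
        Debug.Assert(Thread.CurrentThread != m_ThreadObj, "A thread cannot join itself");
        // Not alive means already dead or never started: nothing to wait for.
        return !m_ThreadObj.IsAlive || m_ThreadObj.Join(timeout);
    }

    // Asks Run() to return, then blocks until the thread is dead. Safe to call repeatedly.
    public void Kill()
    {
        EndLoop = true;
        Join();
    }

    public void Dispose()
    {
        Kill(); // contingency, in case the client never called Kill()
        m_ThreadHandle.Dispose();
    }
}
```

Typical use is `new WorkerThread(true)` at startup and Kill() (or a using block, which calls Dispose()) at shutdown.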