There’s an old programmer adage: “First you make it work, then you make it work fast.” Well, when writing T-SQL queries, you can do both, if you have some knowledge about how the SQL Server optimizer works. This will be a two-part article. In part one, I’ll start with fairly basic optimization tips and techniques for writing SQL queries. In part two, I’ll cover more advanced techniques.

What’s on the Menu?

Here are the different performance optimization tips and topics for this article:

- Basic query optimization fundamentals

- An example of Hash Match Aggregate versus Stream Aggregate

- The Baker’s Dozen Spotlight: how the SQL Server 2012 Columnstore index can help

- More information on the Columnstore index

- Queries using dates - what works well, what doesn’t

- Trying to outsmart the optimizer with NULL checks

- Queries that are search-argument optimized and queries that aren’t

- Are correlated subqueries bad?

- New capabilities in SQL Server 2012 - are they faster?

- Recursive queries versus loops

- APPLY and Table-valued functions versus other approaches

- Inserting and updating multiple rows in one procedure, using INSERT and UPDATE

- Inserting and updating multiple rows in one procedure, using MERGE

SQL Server 2012 Released!

While 10 of the 13 tips in this article will apply to SQL Server 2008, three of the tips cover new features in SQL Server 2012, which Microsoft released in the spring of 2012. If you have the opportunity to try out SQL Server 2012, I strongly encourage you to do so. The performance of the new Columnstore index (in Tips 3 and 4) is worth the effort alone!

Most of the example listings in this article use the AdventureWorks2008R2 demo database, which you can find on CodePlex.

Tip 1: Basic Indexing Fundamentals

Have you ever created an index and wondered why SQL Server didn’t appear to utilize it? Consider the code in Listing 1, which does the following:

- Creates a table from the existing Purchasing.PurchaseOrderHeader table, and then builds two indexes: a clustered index on the PurchaseOrderID column (presumably for a Primary Key) and a non-clustered index on the VendorID.

- Retrieves a handful of columns based on a specific VendorID.

So here’s the question: will the query use the non-clustered index on the VendorID, and perform an Index Seek (one of the more efficient operators)? The answer is no, which might surprise some people. The SQL Server execution plan shows that the optimizer performs an Index Scan through the clustered index. So why does SQL Server take what seems to be the “long way around?”

The answer is because the non-clustered index wouldn’t be as “short” a path as you might think. In Listing 1, the code runs the query again, forcing SQL Server to use the non-clustered index. While the execution plan shows that SQL Server performs an Index Seek, the optimizer also performs a Key Lookup into the clustered index, to retrieve all the “other columns” you want to return, and that winds up being far more costly than just scanning through the clustered index to begin with. How costly? If you run the two queries together in a batch, the query that uses the clustered Index Scan costs about 27% of the batch, while the non-clustered Index Seek/Key Lookup costs 73%! So SQL Server is electing to use the clustered Index Scan.

So how can you modify the scenario so that SQL Server will use the more efficient Index Seek - and more important, NOT use the inefficient Key Lookup to retrieve the other columns you want? The answer is with a covering index - where you can store the columns you frequently wish to retrieve “in” the index. The columns (such as the OrderDate, TotalDue, ShipMethodID, etc.) will not be part of the index key, but are instead columns that “come along for the ride.” That way, SQL Server only needs to read the non-clustered index, and doesn’t need to “cross-reference” to the clustered index (or the original heap table) to retrieve all the other columns you want to bring back.

So how can you modify the scenario so that SQL Server will use the more efficient Index Seek - and more important, NOT use the inefficient Key Lookup to retrieve the other columns you want? The answer is with a covering index - where you can store the columns you frequently wish to retrieve “in” the index.

Accordingly, Listing 1 creates a covering index to include the additional columns. If you test all three scenarios (the clustered index, the non-clustered index on just the VendorID, and the covering index on the VendorID that also includes the other columns you want to return), you’ll see that the query costs are 21%, 76%, and 2% respectively. So clearly, a covering index will yield superior performance!

Obviously, covering indexes come at a cost: they are larger, and SQL Server must maintain the additional entries when you add rows. So developers and DBAs should use them judiciously.

Tip 2: An Example of Hash Match Aggregate versus Stream Aggregate

In the example in Listing 1, we retrieved order data for a single vendor ID. Generally speaking, the more selective the query, the more efficiently SQL Server can retrieve the results. But suppose we need to retrieve orders for all vendors? A covering index can still help.

Consider Listing 2, which groups all the orders by vendor and generates a result set that summarizes orders by vendor. As you can imagine, if the source data is in vendor order, SQL Server can more efficiently read (stream) through the orders to aggregate them by vendor.

In Listing 2, the code first summarizes orders by vendor using the same clustered index from Tip 1. Then the code runs the same query using a non-clustered covering index on VendorID. The former query (using the clustered index) costs about 74% of the batch, while the latter query only costs 26% of the batch. Why? A closer look at the execution plans shows that the faster query uses a Stream Aggregate, since the orders are already in VendorID order. By contrast, the first query must use a much less efficient Hash Match, which brings a much greater cost to the query.

Tip 3: The Baker’s Dozen Spotlight: the SQL Server 2012 Columnstore Index

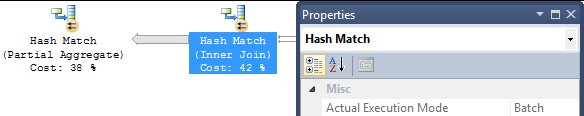

As you saw in Tip 2, the Hash Match is a very costly operator. Historically, the Hash Match for aggregations and the Hash Match for inner joins are usually much less efficient than stream processing of data that is already sorted.

In the March/April 2012 issue of CODE Magazine, I talked about the new Columnstore index in SQL Server 2012. The Columnstore index uses the Xvelocity compression engine to achieve performance enhancements by a factor of 10 or greater. In addition, Microsoft redesigned the underlying logic for the Hash Match operators when using the Columnstore index. As a result, these operators now work in “batch” mode (Figure 1), which can work on batches/“blocks” of roughly 1,000 rows, and in parallel execution mode. Listing 3 shows an example of creating and using a Columnstore index. One note: the Columnstore index is a “readonly” index that cannot be maintained, and is really suited for data warehousing environments.

Tip 4: The SQL Server 2012 Columnstore Index and Outer Joins

Just because you issue a query against a table with a Columnstore index, you are not guaranteed to gain the efficiencies of the new batch mode in SQL Server 2012. For instance, in Listing 4, if you perform an outer join directly on a table with a Columnstore index, SQL Server cannot use batch mode and reverts to the slower row-based mode.

However, all is not lost: later in Listing 4, if we pre-aggregate the data we need into a subquery or common-table expression, and then perform an outer join against the subquery/CTE, we will gain the efficiency of the batch mode again.

Tip 5: Optimizing Date Queries

This next topic will demonstrate another example where new SQL developers sometimes believe they’ll see certain behaviors from SQL Server, only to experience other ones. Listing 5 shows an example of querying on a date column using the YEAR function, when we have an index on the date column. A developer might first think that SQL Server will use an Index Seek to “hone in” on the rows where the year of Order Date is 2007.

Unfortunately, the first query in Listing 5 (which uses the YEAR function) yields an Index Scan instead of an Index Seek. Here’s why, and this is a very important cardinal rule: in order for SQL Server to use an Index Seek, a column must stand alone on one side of a comparison operator. When you pass a date column to a function like YEAR(), SQL Server must scan through all the possible values in the index. By contrast, when you use the BETWEEN statement, the order date column “stands alone” and SQL Server can optimize it with an Index Seek.

In order for SQL Server to use an Index Seek operator, a column must stand alone on one side of a comparison operator.

Tip 6: Trying (and Failing) to Outsmart the Optimizer with NULL Checks

Suppose you have a stored procedure where you want to pass one of two values: either a single scalar parameter or a NULL value. The query in the stored procedure will retrieve rows based on the single value, or all rows if the parameter is NULL.

Listing 6 contains an example. You want to find all the vendors with a certain credit rating, based on a parameter value. If the parameter value is NULL, the query will return all values.

So you try the logic in Listing 6:

SELECT Vendor.Name

FROM Purchasing.Vendor

WHERE @CreditRating is NULL

or CreditRating = @CreditRating

You might believe that by placing the NULL check first, and by using an OR statement, that SQL Server will be able to use an Index Seek when an actual value is passed. Unfortunately, SQL Server cannot optimize this with an Index Seek. SQL Server has to anticipate all possible outcomes here, and uses an Index Scan, regardless of what value is passed. The only way to get an Index Seek is either to use dynamic SQL, or to use IF….ELSE logic (on the parameter being NULL) with two different SQL statements.

Tip 7: Queries that Are Search-Argument Optimized (SARGable) and Queries that Aren’t

Those who have read my Baker’s Dozen articles in the last year will recognize this next tip. Listing 7 shows an example that is similar in concept to the issue in Listing 5. Suppose, based on a date parameter value, you want to find all orders going back the last 7 days. The first query in Listing 7 uses the DATEDIFF function directly on the OrderDate column. Because of the rule I stated back in Tip 5, that the column must appear ALONE on one side of the comparison operator, SQL Server cannot optimize the use of an index on the OrderDate column, and must use an Index Scan.

However, if you rewrite the query to place the OrderDate column on the left side of a comparison operator, and use a Date function on the right side, SQL Server will generate an index seek.

Listing 8 shows another example where using the column name alone on either side of the comparison operator can elevate the execution operator to an Index Seek. If you use the column name alone to the left of a LIKE operator, you can get an Index Seek (assuming you don’t use the ‘%’ wildcard symbol at the beginning of the search string).

Tip 8: Are Correlated Subqueries Bad?

Want to get a DBA’s blood pressure to rise? Tell him/her that you’re using a correlated subquery - visions of SQL Server running a non-optimized query inside a loop of millions of iterations will dance madly in their heads. Fortunately, correlated subqueries aren’t always bad.

A correlated subquery is one where the inside query cannot run on its own - it is tethered to a column on the outside. For this reason, many assume that SQL Server will run the inside subquery for every instance of a row on the outside. In reality, SQL Server will often optimize the query.

Let’s take the query in Listing 9, which retrieves the purchase orders and sales orders and summarizes the dollars by product. Structurally, I’ve used the concepts in this query over the years to demonstrate many different things, such as using subqueries when you have multiple 1-many relationships.

The query also demonstrates how SQL Server will optimize the query if you use two subqueries as common table expressions (or derived table subqueries), OR if you use correlated subqueries to retrieve the summary of purchase orders and sales orders by product. Many would think that the 2nd query (using the correlated subquery) would be significantly slower and carry a much higher query cost.

However, both queries carry an equal cost of 50% when run together. The execution plans are very similar. The first query takes slightly longer for SQL Server to parse and compile, and the second query takes slightly longer to execute. But the general performance of the two is much closer to the same than most people would first realize!

Tip 9: New Analytic Functions in SQL Server 2012

SQL Server 2012 contains many new analytic functions for calculating such items as percentile ranking and median averages. The latter is a personal favorite of mine, as I’ve had to calculate median averages manually in T-SQL for years (due to the lack of any native function in T-SQL).

When a product implements a new function that developers have previously addressed manually, the question is whether the new function will perform as well. Listing 10 shows the “old” way of calculating the median average (“middle score”), by generating a row number value and then performing a straight mean average on the middle row(s). The same listing also shows the “new” way, using PERCENTILE_CONT with a parameter of .5.

So which is faster? First, unless this query is run frequently and by many people, and unless SQL Server can optimize one significantly over the other for a large amount of data, this probably won’t go down in the annals of time as a major debate for either the “incumbent” method or the new method. But as it turns out, the new PERCENTILE_CONT function is slightly slower, carrying a cost of about 52% (with a little more overhead for parsing) compared to 48% for the old ROW_NUMBER method. So while the new function carries a little more overhead and generates a more complicated execution plan with many table spool operations, other factors (approach, readability, interest in using the new functions) might carry more weight in determining which method you choose.

Another example of the new features in SQL Server 2012 is the new OFFSET/FETCH capability for paging result sets. Previously, SQL developers had to generate a ROW_NUMBER and then query against it in a subquery/common table expression. Listing 11 shows both the “new” approach (using OFFSET/FETCH) and the “old” approach using ROW_NUMBER and a subsequent filter. The execution plans and costs are nearly identical, though the older approach takes a few more milliseconds. The result is that developers can feel safe that the new OFFSET/FETCH capability should perform at least as well as the older approaches.

Tip 10: Recursive Queries versus Loops

I’ve always been a fan of the T-SQL features that appeared in SQL Server 2005, particularly recursive queries and the CROSS APPLY operator that I’ll cover in Tip 11. Recursive queries open up all types of opportunities to implement set-based operations in areas where some believe a cursor or procedural loop is necessary.

For example, consider the code in Listing 12, which shows two different ways to populate a date calendar - one using a recursive query and one using a loop. I sometimes ask people, as a friendly non-monetary wager, which method they believe is faster. The majority of people believe the procedural loop will be faster. It isn’t! The recursive query wins hands-down, running in about 50 milliseconds compared to about 550 milliseconds for the procedural loop.

Tip 11: Performance Issues with Table-valued Functions and CROSS APPLY

I’ve built many reporting applications over the years, and I’ve made great use of table-valued functions and CROSS APPLY to generate complex result sets. However, I also realize that these statements carry overhead - and so after my initial zeal in using them, I’ve tried to use them judiciously, and point out to others where they can incur performance penalties.

Listing 13 shows a rather interesting challenge - showing the top 5 orders (based on $ amount) for each vendor. The first approach implements a table-valued function (TVF) that returns the top 5 orders for a single vendor, and then applies that TVF to all the rows in the Vendor table using CROSS APPLY.

The second approach queries the entire Vendor table and Purchase Order header table as a set-based operation, using RANK() and PARTITION BY (to reset the ranking on a change of vendor). Also note that you need to use a subquery to “materialize” the ranking and then filter on it (to get the five best for each vendor). This is necessary for two reasons. First, a TOP 5 statement won’t work when you want the five best “by” a particular column. Additionally, you can’t place a RANK() function in a WHERE clause because the RANK() function reads over a window of rows - such an operation is forbidden by the WHERE clause, which reads one row at a time.

Clearly, you expect the TVF/APPLY approach to be slower - but by how much? As it turns out - plenty. The TVF/APPLY approach costs 91% of the batch when the two run together. This is largely due to a potentially expensive spooling of the data, which transforms the data into a temporary index for seeking with the results of the TVF.

Now, for this query with a few thousand orders, the difference in time is about 200 milliseconds versus 170 milliseconds. But still, on a larger database with a query that might run many times, a developer will need to consider if the modularity/reusability of the TVF will justify the additional overhead.

Tip 12: Inserting and Updating Multiple Rows in One Procedure using INSERT and UPDATE

This topic (both this tip and Tip 13) is one of my personal favorites: I often use this topic as a means to demonstrate the power of the MERGE statement in SQL Server 2008.

Here is the scenario: suppose you have an “incoming” input file of rows (in this example, currency exchange rates for different countries and different dates). The business key is the currency “from” code (e.g. Mexican peso), the currency “to” code (e.g. U.S. dollar), and the date of the exchange rate.

Some of the rows might be new rows you don’t yet have in the production table (Sales.CurrencyRate). Some of the rows might be rows you have - and either the incoming rows have updated (changed) rates, or perhaps the incoming rows are identical (and no update is needed).

Before the MERGE statement came around in SQL Server 2008, developers might use a stored procedure similar to Listing 14. The listing does an INSERT into the target table based on rows that don’t exist from the source, and then a separate UPDATE statement for matching rows (based on the business key) where the rates have changed.

Before SQL Server 2008, this is why developers asked for an “up-sert” function, to accomplish this in one statement.

Tip 13: Inserting and Updating Multiple Rows in One Procedure, using MERGE

Listing 15 finishes this discussion from the previous tip, with the same scenario implemented using a MERGE statement. The MERGE statement allows you to reference a target table, a source table (or derived table subquery), the rules for joining the two to determine a match, and then a series of “when match, when not matched” scenarios.

The MERGE statement is the winner on query cost, running with a cost of roughly 45% compared to the 55% for the INSERT and then UPDATE technique. With 10,000 rows, the actual execution times are very similar, though the MERGE will win out on execution time on larger input streams.

Next Time Around in the Baker’s Dozen

In the grand scheme of SQL Server optimization techniques, this was more of an introductory article, though even experienced developers might have learned a trick or two. In the next installment of the Baker’s Dozen, I’ll cover 13 more advanced scenarios for query optimization.