Calls to services hosted in Azure time out after four minutes. It doesn't matter whether they're WCF-style App Services or WebAPI; there's nothing you can do about it. There are no settings to adjust and no options to configure. It's a system constraint set by Microsoft, and a reasonable one at that. So how do you handle long-running processes or fire-and-forget operations? You can build your own mechanism, or you can use some of the many Platform as a Service (PaaS) options that really smart and capable people have built and made available for prices ranging from free to very, very reasonable.

In this article, I'm going to show you how to program for long-running processes, fire-and-forget operations, event-driven operations, operations that may have to scale up (and down) dramatically, and other scenarios that might be unfamiliar to the typical line-of-business app developer. These solutions can scale automatically from micro to massive. They're modular and shareable. These techniques and technologies are not for everything, but they're fantastic when you need them.

In my last article in CODE Magazine (Sept/Oct 2016), I wrote about generating reports as PDFs, on the server-side via a WebAPI call, and using the Visual Studio report controls. But reports can sometimes take a while to generate and clients don't usually want to sit and wait. What if the user navigates away from the page so the callback for the completed report can't happen? And what if the report takes more than four minutes and the call times out? Today, I'm going to expand on that article and make the report generation asynchronous. That's going to allow me to do some wonderful things like give feedback to the user while the report is being created, let the user do other things (like run other reports) while reports are created, and even let the user generate reports and send them, via email, text or some other method with a fire-and-forget call. As you'll see, I can even trigger reports based on some event happening, without calling the report directly. And if I have a report that takes four hours to put together and generate, it will still work while the user goes about his business.

WebJobs: Create a Sample WebJob

WebJobs were introduced in Azure around 2014 and are a powerful way to run background tasks in the cloud. The background task can be anything from a simple batch file, to a PowerShell script, to a .NET executable that you create. You can even run Node.js, Python and Bash scripts. They can be run on a schedule or triggered by some event. You can think of them as the successors to the old Worker Roles in Azure that pulled jobs off a queue and processed them. WebJobs are built, run, and deployed within an Azure Web app, but don't require a website. Because the cloud reporting project is an ASP.NET MVC website running in Azure, I'm going to add a WebJob to that website that allows users to request that a report is generated and emailed to a user asynchronously in a fire-and-forget operation. The Azure WebJobs SDK is installed as part of the Azure SDK 2.5 and later and includes some QuickStart templates for WebJobs, so if you have the latest Azure SDK installed, you're all set. You can download the source code from the Sept-Oct 2016 article here.

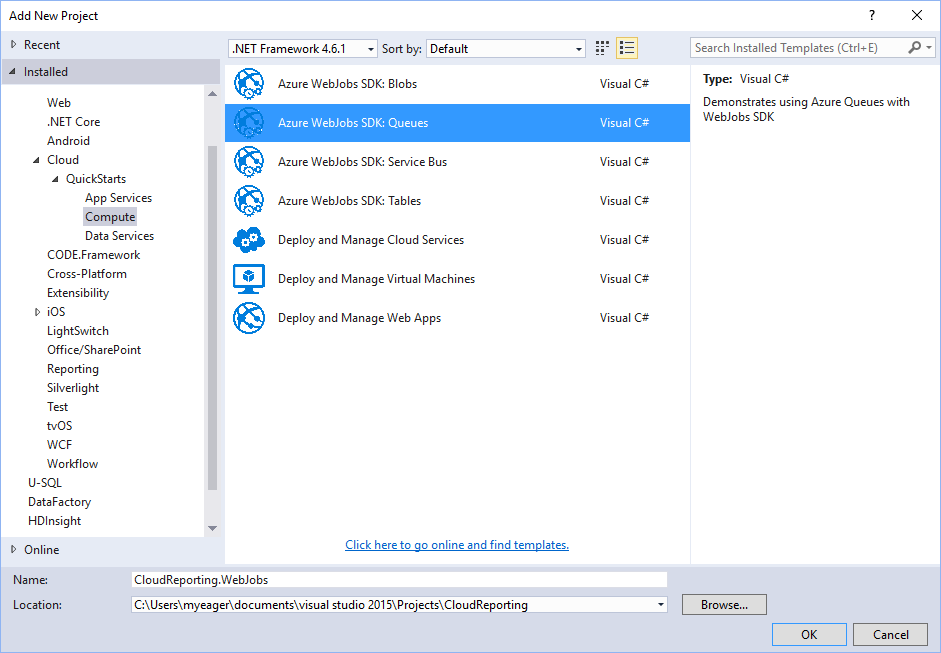

Add a new project to the CloudReporting solution and choose Cloud > QuickStarts > Compute > Azure WebJobs SDK: Queues and name the new project CloudReporting.WebJobs, as shown in Figure 1.

The template automatically adds the appropriate NuGet packages and project references and creates a console application, which is where the report will run. Make the WebJobs project the startup project for the solution and press F5 to run it. You'll see a console window pop up with a message telling you to add your Azure Storage account credentials to the App.config. What's the storage account for? One type of storage in Azure is a queue and you're going to drop messages on that queue whenever a report needs to be generated and emailed. Do you think that it's starting to sound expensive? Well, it isn't. The WebJob is going to run in the existing website and uses its resources. Even the Free pricing tier can run WebJobs. In the last article, I chose the Basic (B1) pricing tier or higher to deploy the CloudReporting website because the nature of generating PDFs prevents you from using the Free or Shared pricing tiers for this purpose. But this has nothing to do with WebJobs. Queue prices start at seven cents per GB, per month, plus 0.3 cents per 100,000 transactions per month. Because you'll be pulling entries off the queue almost as fast as you put them on, and because you won't be running a whole lot of reports a month, I don't expect the queue to add more than a couple of cents to the monthly bill for the expected load.
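To put rough numbers on that estimate, the arithmetic can be sketched as follows. The two prices are the ones quoted above. The workload figures (1,000 reports a month, three queue transactions per report, one idle poll per minute, and a megabyte of stored messages) are purely illustrative assumptions, and `QueueCostEstimate` is a hypothetical helper rather than anything from the Azure SDK.

```csharp
using System;

// Hypothetical helper: estimates a monthly Storage queue bill in cents,
// using the prices quoted in the article (assumed to still apply).
public static class QueueCostEstimate
{
    const decimal CentsPerGbMonth = 7m;            // storage price
    const decimal CentsPer100KTransactions = 0.3m; // transaction price

    public static decimal MonthlyCents(decimal storedGb, long transactions)
    {
        return storedGb * CentsPerGbMonth
             + (transactions / 100000m) * CentsPer100KTransactions;
    }

    public static void Main()
    {
        // Assumed workload: 1,000 reports, each costing an add, a get, and a delete.
        long reportTransactions = 1000L * 3;
        // Assumed idle polling: one empty read per minute for a 30-day month.
        long pollTransactions = 60L * 24 * 30;

        decimal cents = MonthlyCents(0.001m, reportTransactions + pollTransactions);
        Console.WriteLine("Estimated queue cost: {0:0.###} cents per month", cents);
    }
}
```

Under these assumptions, the total stays well below one cent a month, and most of it comes from idle polling rather than from the reports themselves.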

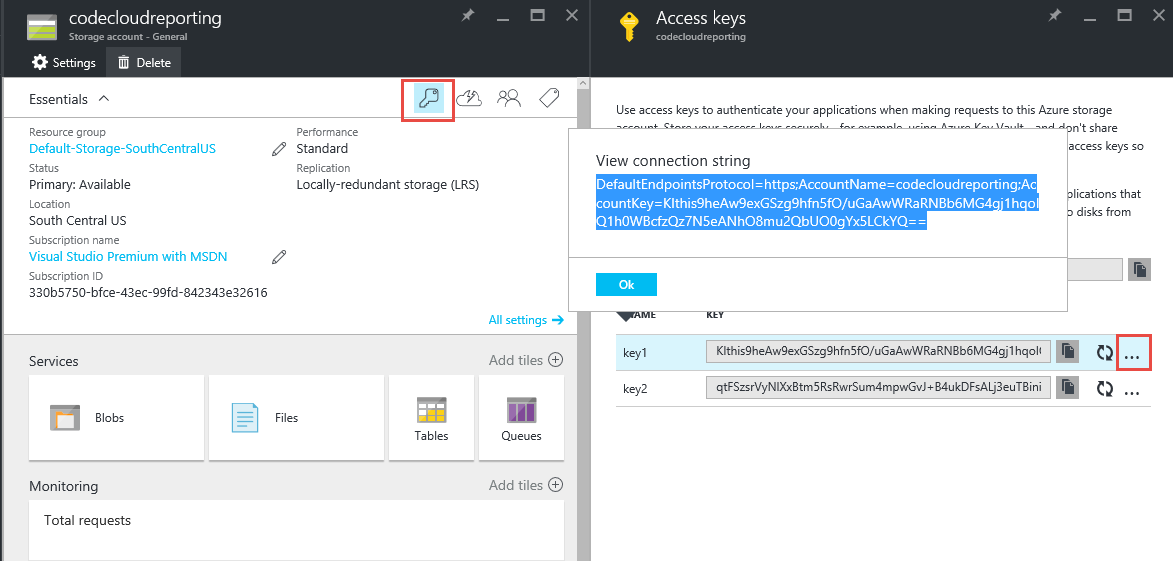

If you haven't done so already, open up the Azure portal at portal.azure.com, log into your account, and click New in the left-hand menu. Under Data + Storage, select Storage Account and give it a name. The name must be unique within .core.windows.net and can only contain lower-case letters and numbers. I called mine codecloudreporting. You can leave most of the defaults. Because this is a development system, I'm going to choose locally-redundant storage (LRS) for my replication because it's the least expensive option and because I don't care that much if I lose some queue entries in the unlikely event that an entire data center goes down. The resource group isn't important for your purposes, but for best performance, do choose a location close to where you are. In my case, I chose South Central US, which is the same location my website runs in and also close to where I work in Houston. Azure takes a minute or two to provision the storage account. Once it's finished, open the storage account in the portal, find the Access keys, and click on the ellipsis to view the connection string, as shown in Figure 2. Copy and paste this string into both connection string entries in the app.config file. The connection string includes both the endpoint to connect to and the login credentials necessary to connect.

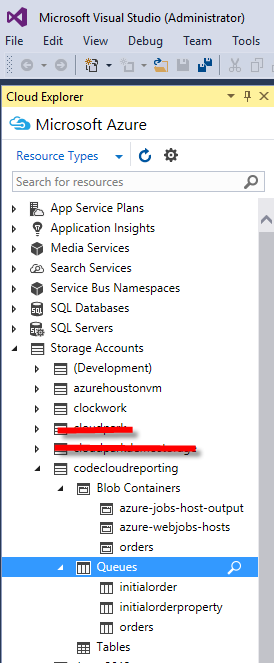

Save the app.config file and press F5 again. This time, the app creates several queues and BLOB containers in the storage account and does some processing. A very good tool to look at Azure Storage accounts is the Cloud Explorer in Visual Studio, as you can see in Figure 3. Because Cloud Explorer is now part of the Azure SDK, you should already have it installed.

If you look at the contents of the queues, you'll find that they're all empty, as all of the sample messages have been processed by the sample code in the WebJob. If you like, you can look at the CreateDemoData method of Program.cs and follow the sample code in Functions.cs to see how the sample messages were processed. The output on the console window shows you the order in which the code was executed. The sample code is a pretty good introduction to using queues in WebJobs.

Modify the Sample

Replace the call to CreateDemoData in the Main method of Program.cs and the method itself with configureQueues(), as shown in Listing 1. Because this code only runs on startup of the WebJob, it's a good place to make sure that your queues are configured prior to listening for events.

Listing 1: CreateDemoData in the Main method of Program.cs

    class Program
    {
        static void Main()
        {
            if (!VerifyConfiguration())
            {
                Console.ReadLine();
                return;
            }

            configureQueues();

            JobHost host = new JobHost();
            host.RunAndBlock();
        }

        ...

        private static void configureQueues()
        {
            var storageAccount = CloudStorageAccount.Parse(
                ConfigurationManager.ConnectionStrings["AzureWebJobsStorage"].ConnectionString);
            var queueClient = storageAccount.CreateCloudQueueClient();
            var queue = queueClient.GetQueueReference("report-request");
            queue.CreateIfNotExists();
        }
    }

You'll notice that the most interesting code in Program.cs is the last two lines of Main, which create an instance of JobHost and then call the RunAndBlock method. That's essentially all a WebJob is: a process that loads into memory and then waits for something to trigger a method. All of the interesting processing code can be found in Functions.cs, but you aren't going to need the sample code, so you can delete that file or comment out its contents.

Right-click on the project and choose Properties. Change the Assembly name and Default namespace to CloudReporting.WebJobs. There appears to be a bug in the project template that prevents these properties from being filled in correctly. If you don't make the changes manually, all of your new files will, by default, be in a different namespace. I expect Microsoft to clean this up in some future version of the project template.

Add a new Class to the WebJobs project and name it RunReport.cs. Add a using statement at the top for Microsoft.Azure.WebJobs. This namespace contains the attributes you're going to add to some of the method parameters as well as some handy classes for building WebJobs. Make the class public and add a new public, static method named ReadReportRequestQueue, as shown in Listing 2.

Listing 2: The public, static method, ReadReportRequestQueue

    using Microsoft.Azure.WebJobs;
    using System.IO;

    namespace CloudReporting.WebJobs
    {
        public class RunReport
        {
            public static void ReadReportRequestQueue(
                [QueueTrigger("report-request")] string request, TextWriter log)
            {
                log.WriteLine("Read from Report Request Queue: " + request);
            }
        }
    }

Although most developers have seen attributes applied to classes, methods, and properties, it might be odd to see them applied to method parameters. In this case, the first parameter is a string named request, which has a QueueTrigger attribute applied to it, and that attribute performs some magic for you. When it's applied, the WebJobs SDK uses the AzureWebJobsStorage connection string in the app.config file that you configured earlier to log into your Storage account, looks for a queue named report-request, and waits for entries to show up in the queue. When they do, this method is triggered for you and the payload of the queue entry gets stuffed into the request parameter. That's a lot of plumbing work to get just by adding an attribute to your parameter! There are several attributes like this one that you can use to wire up plumbing. This one listens to an Azure Storage queue, but there are many others. The second parameter is a TextWriter named log that the WebJobs SDK provides for you if you want to use it. There are a few such magic parameters you can add to your method calls, but this is the most common. I suggest downloading the Quick Reference PDF that outlines all of the attributes and special parameter types available in the SDK from webjobs-sdk-quick-reference.pdf.

How does this magic work? When the app is loaded and JobHost.RunAndBlock() is called, the app is scanned (using Reflection) for public classes with public, static methods and the method parameters are examined for type and attributes. The app then listens for the specified events and, when they occur, makes calls to your methods. This is actually pretty similar to how ASP.NET MVC finds startup code, controllers, etc., and wires them up for you. So it's really JobHost that uses Azure's WebHooks, a polling system and/or timers to listen and wait for events that trigger calls to your methods. It's also JobHost that creates and passes the TextWriter to you in a process similar to dependency injection. All of that plumbing work comes from the WebJobs SDK so you can concentrate on writing logic.
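The discovery step described above can be illustrated with a few lines of plain reflection. This is only a sketch of the general technique and not the SDK's actual implementation: `DemoTriggerAttribute`, `DemoFunctions`, and `TriggerScanner` are hypothetical names invented for the example, and the "queue message" is simulated by invoking the discovered method directly.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical stand-in for an SDK trigger attribute such as QueueTrigger.
[AttributeUsage(AttributeTargets.Parameter)]
public sealed class DemoTriggerAttribute : Attribute
{
    public string QueueName { get; private set; }
    public DemoTriggerAttribute(string queueName) { QueueName = queueName; }
}

public class DemoFunctions
{
    public static void ReadReportRequestQueue(
        [DemoTrigger("report-request")] string request)
    {
        Console.WriteLine("Triggered with: " + request);
    }
}

public static class TriggerScanner
{
    // Maps each queue name to the public static method that listens to it.
    public static Dictionary<string, MethodInfo> Scan(Assembly assembly)
    {
        var map = new Dictionary<string, MethodInfo>();
        var methods = assembly.GetTypes()
            .Where(t => t.IsClass && t.IsPublic)
            .SelectMany(t => t.GetMethods(BindingFlags.Public | BindingFlags.Static));

        foreach (var method in methods)
        {
            foreach (var parameter in method.GetParameters())
            {
                var trigger = parameter.GetCustomAttribute<DemoTriggerAttribute>();
                if (trigger != null) map[trigger.QueueName] = method;
            }
        }
        return map;
    }

    public static void Main()
    {
        var map = Scan(typeof(DemoFunctions).Assembly);
        // Simulate a message arriving on the queue.
        map["report-request"].Invoke(null, new object[] { "hello" });
    }
}
```

The real JobHost does considerably more (binding different parameter types, polling, logging, and error handling), but the starting point is the same: find the attributed parameters, then call the method when the matching event occurs.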

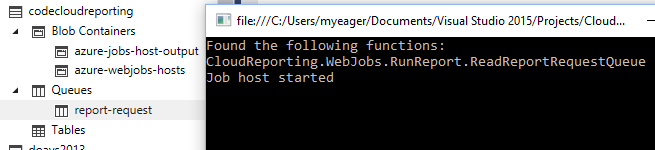

Press F5 again and run the app. There will be a lot less chatter on the screen this time because you're not running the sample code anymore. It only shows that it found your ReadReportRequestQueue function and started running. If you take a look in Cloud Explorer, you should also see that it created the report-request queue that you specified in the configureQueues method of Program.cs, as shown in Figure 4.

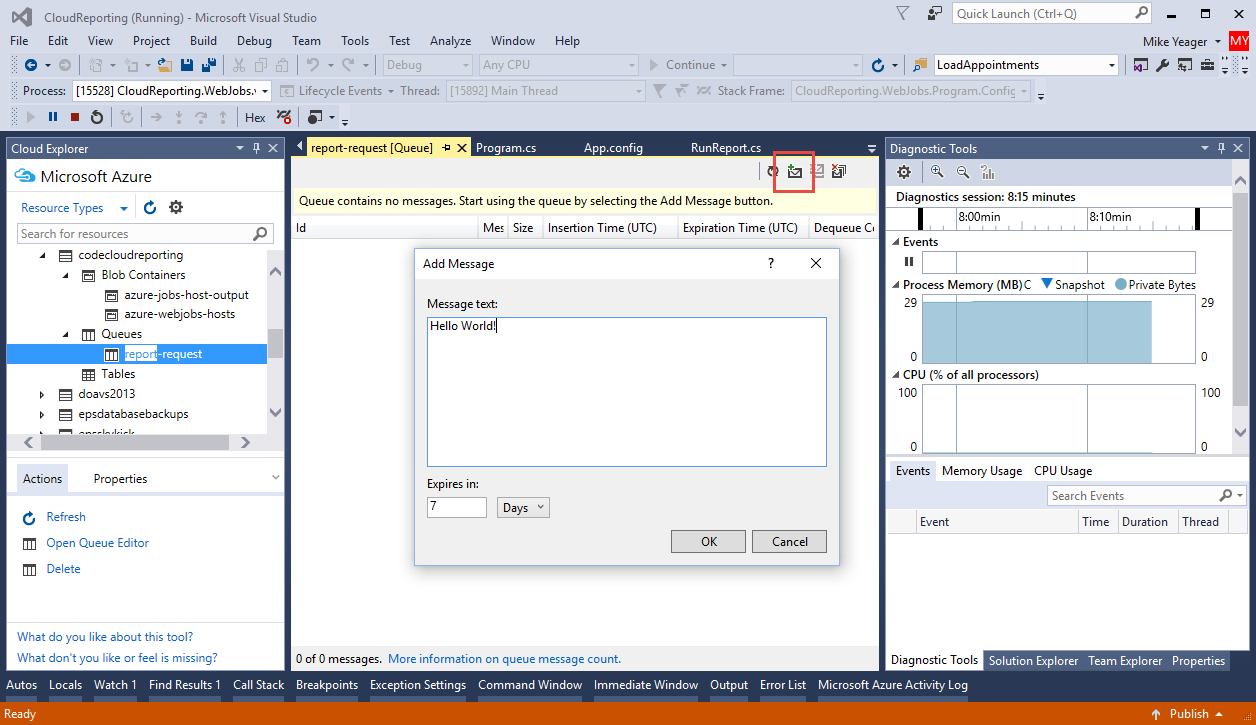

Double-click on the report-request queue in Cloud Explorer, click the Add Message toolbar button, enter some text, and click OK, as shown in Figure 5. You'll see your message added to the queue, and then a few moments later, you'll see a message on the WebJob console window indicating that it saw your queue entry and executed your ReadReportRequestQueue method. If you refresh the queue in Cloud Explorer, the message will be gone.

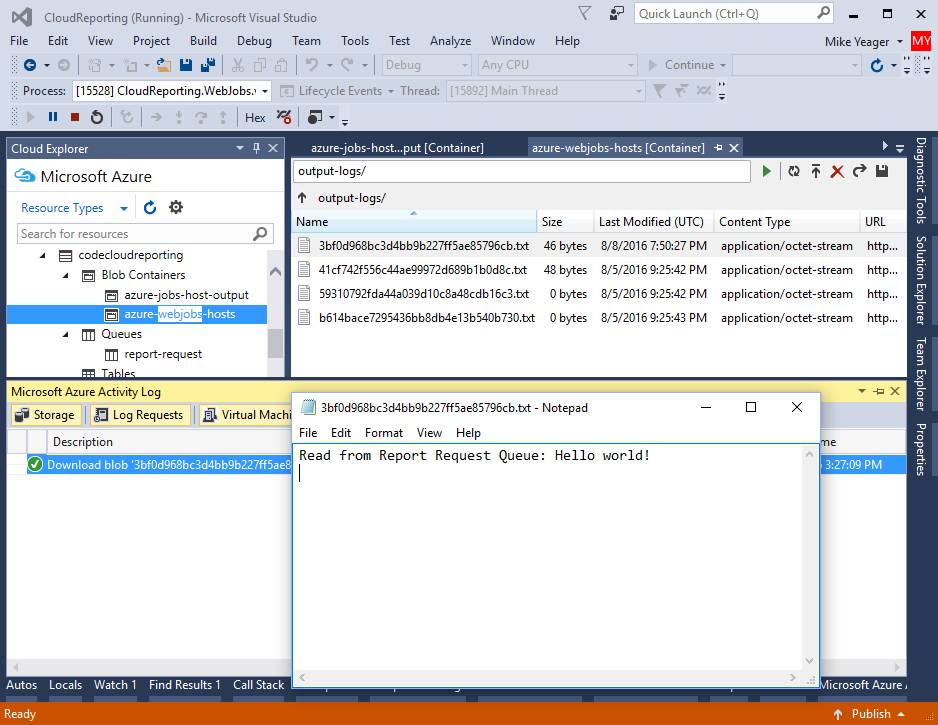

In Cloud Explorer, double-click on the azure-webjobs-hosts Blob Container, open the output-logs folder, and double-click on the most recent entry, as shown in Figure 6. This is where your WebJob log entries are written when you use the TextWriter parameter in a WebJob function. Each entry is the summation of everything written to the TextWriter within the entire function call.

But Wait! There's More!

If that's all that the WebJobs SDK did for you, it would be a lot, but there's more. In this first example, you want to generate a report and email it to someone when it's ready, so it would make sense to pass in an object containing the To and From email addresses and the Subject of the email. You've probably noticed that the queue's payload is a string, but what if you want to pass something more sophisticated? Because JSON can represent complex objects as a string, it's not a big leap to serialize the object into JSON before you put it on the queue and then de-serialize it back into an object before you use it in the function. Because the SDK is already doing a lot of work for you, between spotting new queue entries and calling your functions, it's not surprising that the authors of the SDK thought of this. Deserialization of parameters into objects is handled automatically.

Add a new class to the CloudReporting.Reports project (so that it can be shared with other projects later) and name it ReportParameters. Add public properties for Category, Format, To, From, Subject and SessionId (which you'll use later), as shown in Listing 3.

Listing 3: Adding public properties

    using System; // needed for Guid

    namespace CloudReporting.Reports
    {
        public class ReportParameters
        {
            public string Category { get; set; } = string.Empty;
            public string Format { get; set; } = string.Empty;
            public string To { get; set; } = string.Empty;
            public string From { get; set; } = string.Empty;
            public string Subject { get; set; } = string.Empty;
            public Guid SessionId { get; set; } = Guid.Empty;
        }
    }
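The round trip that the SDK performs on your behalf can be sketched with Json.NET, the same `JsonConvert` class that appears in Listing 6. This is an illustration only, assuming the Newtonsoft.Json NuGet package is referenced. It isn't the SDK's internal code, and `ReportParametersRoundTrip` is a hypothetical name.

```csharp
using System;
using Newtonsoft.Json;

// Same shape as Listing 3, repeated so this sketch compiles on its own.
public class ReportParameters
{
    public string Category { get; set; } = string.Empty;
    public string Format { get; set; } = string.Empty;
    public string To { get; set; } = string.Empty;
    public string From { get; set; } = string.Empty;
    public string Subject { get; set; } = string.Empty;
    public Guid SessionId { get; set; } = Guid.Empty;
}

public static class ReportParametersRoundTrip
{
    public static void Main()
    {
        var original = new ReportParameters
        {
            Category = "Bikes",
            Format = "PDF",
            To = "myemail@mycompany.com"
        };

        // What a sender does before dropping the message on the queue.
        string json = JsonConvert.SerializeObject(original);

        // Roughly what happens before a triggered function receives its parameter.
        ReportParameters copy = JsonConvert.DeserializeObject<ReportParameters>(json);

        Console.WriteLine(json);
        Console.WriteLine(copy.To == original.To); // True
    }
}
```

Properties that are missing from the JSON simply keep their default values, which is why the defaults in Listing 3 are worth having.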

Next, in the WebJobs project, add a reference to both the CloudReporting.Reports and CloudReporting.DataRepository projects. Change the request parameter type from string to ReportParameters in RunReport.cs. Add a few logging calls to echo out the values of the properties, as shown in Listing 4. If you get an error on build, check the references for the WebJobs project. If there's a problem with the references to the other projects, it may be because those projects were created with .NET Framework 4.6.1. You may need to update the framework version in the WebJobs project via the project properties.

Listing 4: Add a few logging calls

    public static void ReadReportRequestQueue(
        [QueueTrigger("report-request")] ReportParameters request, TextWriter log)
    {
        log.WriteLine("Read from Report Request Queue.");
        log.WriteLine(" to: " + request.To);
        log.WriteLine(" from: " + request.From);
        log.WriteLine(" subject: " + request.Subject);
    }

Press F5 to run the WebJob again. Open up the queue in Cloud Explorer and add a new queue entry. This time, for the message, type in the following JSON:

    {
        "To": "myemail@mycompany.com",
        "From": "system@cloudreporting.com",
        "Subject": "Here is your report"
    }

Once the WebJob has processed the message, open the log in the azure-webjobs-hosts Blob Container and verify that the data is correct. Note that you may have to refresh the data in Cloud Explorer. Be aware that the maximum size of a Storage queue message is 64 KB, so your serialized JSON payload cannot exceed that limit.
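If your payloads might grow, a small guard before queuing can catch oversized messages early. The sketch below is a hypothetical helper (`QueuePayloadGuard` is not part of any SDK). It also assumes that your client library may Base64-encode message text, in which case every three bytes become four characters and the usable payload shrinks to roughly 48 KB. Whether that applies depends on the library and its settings.

```csharp
using System;
using System.Text;

// Hypothetical guard: checks a serialized payload against the 64 KB limit.
public static class QueuePayloadGuard
{
    public const int MaxMessageBytes = 64 * 1024;

    // When the client Base64-encodes messages, every 3 bytes become 4 characters.
    public static int Base64Length(int byteCount)
    {
        return 4 * ((byteCount + 2) / 3);
    }

    public static bool Fits(string json, bool base64Encoded)
    {
        int bytes = Encoding.UTF8.GetByteCount(json);
        int onTheWire = base64Encoded ? Base64Length(bytes) : bytes;
        return onTheWire <= MaxMessageBytes;
    }

    public static void Main()
    {
        string small = "{\"To\":\"myemail@mycompany.com\"}";
        string large = new string('x', 50 * 1024);

        Console.WriteLine(Fits(small, true));   // True
        Console.WriteLine(Fits(large, false));  // True: 50 KB of raw text is under 64 KB
        Console.WriteLine(Fits(large, true));   // False: Base64 grows it past 64 KB
    }
}
```

For anything larger, a common approach is to store the data elsewhere (a blob, for example) and put only a reference to it on the queue.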

Because you're going to be generating reports in the WebJob, you'll need to add the same Microsoft.ReportViewer.2015 NuGet package to the WebJobs project that you added to the MVC project in the last article. Also, add a reference to System.Web, which the ReportViewer control requires to work properly. The actual code for generating the report and sending emails can be found in the accompanying source code download for this article. The mechanics of generating the report were well covered in the last article, so I won't list that code here. The logic for using the report in the WebJob can be found in RunReport.cs, as shown in Listing 5.

Listing 5: The RunReport.cs

    public static void ReadReportRequestQueue(
        [QueueTrigger("report-request")] ReportParameters request, TextWriter log)
    {
        log.WriteLine("Read from Report Request Queue.");

        var pdf = getReport(request.Category, request.Format, log);
        if (pdf == null)
        {
            // Report generation failed: log it and notify the help desk instead.
            log.WriteLine("Failed to send report to: " + request.To);
            sendEmail(request.From, "helpdesk@mycompany.com", request.Subject,
                "Please check the WebJob logs.", null);
            return;
        }

        sendEmail(request.From, request.To, request.Subject,
            "You must have a PDF reader installed on your machine to open the report.", pdf);
        // Log success only after the email has actually been sent.
        log.WriteLine("Successfully sent report to: " + request.To);
    }

You can test the report and email by manually adding an entry to the queue with the following JSON. If you run into an error processing the request, check the logs. It may be because you cut and pasted the JSON and the parser didn't like the fancy quotes. You may want to try typing in the JSON. If you don't receive the email, you may not have replaced the sample email address with your own.

    {
        "Category": "Bikes",
        "Format": "PDF",
        "To": "myemail@mycompany.com",
        "From": "system@cloudreporting.com",
        "Subject": "Here is your report"
    }

Triggering WebJobs from a Service Call

Up until now, you've been manually starting jobs by adding entries to an Azure Storage queue. You could ask your client app developers to use the Azure Storage queue REST API to add new requests to the queue, but it'll be easier for them if you just add service calls to the existing WebAPI services and have the services communicate with the queues.

Because you'll be working with Azure Storage Queues in the service calls, add the WindowsAzure.Storage NuGet package to the MVC project. It's already being used in the WebJobs project, thanks to the WebJobs project template that you used. Open ReportController.cs in the MVC project and add a new Get method with three string parameters: category, format and email. The code you use to connect to the queue is almost identical to the code you used to initialize the queue in Program.cs in the WebJobs project, so the code in Listing 6 should look familiar.

Listing 6: Connect to the queue

    public EmailReportResponse Get(string category, string format, string email)
    {
        try
        {
            var response = new EmailReportResponse();
            var parameters = new ReportParameters
            {
                Category = category,
                Format = format,
                To = email,
                From = "system@cloudreporting.com",
                Subject = "Here is your report"
            };

            var storageAccount = CloudStorageAccount.Parse(
                ConfigurationManager.ConnectionStrings["AzureWebJobsStorage"].
                ConnectionString);
            var queueClient = storageAccount.CreateCloudQueueClient();
            var queue = queueClient.GetQueueReference("report-request");
            queue.AddMessage(new CloudQueueMessage(
                JsonConvert.SerializeObject(parameters)));

            response.Success = true;
            return response;
        }
        catch (Exception ex)
        {
            //Log the error here.
            return new EmailReportResponse
            {
                Success = false,
                FailureInformation = "Could not queue report."
            };
        }
    }

    public class EmailReportResponse
    {
        public bool Success { get; set; } = false;
        public string FailureInformation { get; set; } = string.Empty;
    }

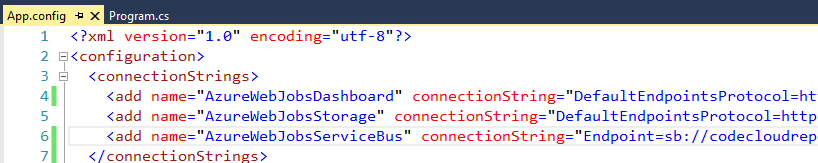

Copy the two connection strings related to Storage queues from the app.config in the WebJobs project into the web.config in the MVC project. The service call in the MVC project needs this information to find the queue. In addition, when you later deploy the WebJobs as part of the MVC application, the WebJobs read their settings from the web.config and not from their own app.config files.

Because you're adding a new GET WebAPI call with a third parameter, make sure to allow for up to three optional parameters in the routeTemplate in WebApiConfig.cs. In fact, you're going to add a fourth sessionId parameter as well because you'll be using that later, as shown in Listing 7.

Listing 7: Add a fourth sessionId parameter

    public static void Register(HttpConfiguration config)
    {
        config.SuppressDefaultHostAuthentication();
        config.Filters.Add(new HostAuthenticationFilter(
            OAuthDefaults.AuthenticationType));

        config.MapHttpAttributeRoutes();

        config.Routes.MapHttpRoute(
            name: "DefaultApi",
            routeTemplate: "api/{controller}/{category}/{format}/{email}/{sessionId}/",
            defaults: new { category = RouteParameter.Optional,
                            format = RouteParameter.Optional,
                            email = RouteParameter.Optional,
                            sessionId = RouteParameter.Optional }
        );
    }

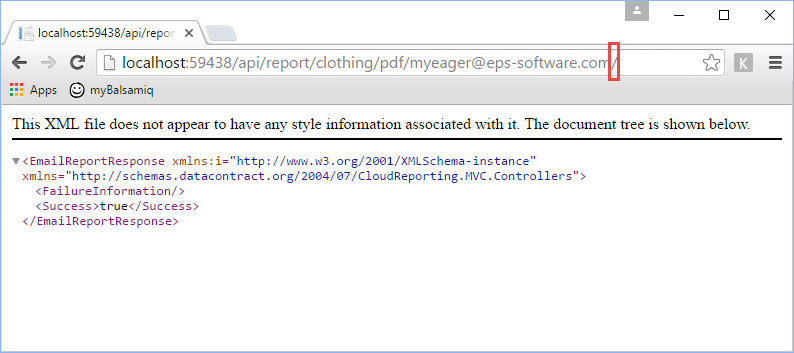

Right-click on the solution and choose Set Startup Projects... Choose Set Multiple Startup Projects, and set both the MVC and WebJobs project to start. When you run the code, you can test the WebAPI call by adding /api/report/clothing/pdf/myemail@mycompany.com/ to the site URL. Most modern browsers URL-encode the @ symbol in the email address for you, but if your browser doesn't, you can replace @ with %40. Make sure to end the URL with a forward slash so that both the @ and period in the email address are handled properly, as shown in Figure 7. Don't forget to use your email address in the URL. The service call returns almost instantly with success, as long as the request can be put on the queue. Success, in this case, doesn't indicate that the report has run or will run successfully, only that the request is successfully queued and that is a very fast operation.
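If a client app builds this URL in code rather than in a browser address bar, it's safer to encode each segment explicitly. The following is a minimal sketch, assuming the route shape from Listing 7. `ReportUrlBuilder` is a hypothetical helper and `http://localhost:12345` is only a placeholder for your own site URL.

```csharp
using System;

// Hypothetical client-side helper for building the report request URL.
public static class ReportUrlBuilder
{
    public static string Build(string siteUrl, string category, string format, string email)
    {
        // EscapeDataString turns '@' into %40 and leaves letters, digits, and '.' alone.
        return siteUrl.TrimEnd('/') + "/api/report/"
            + Uri.EscapeDataString(category) + "/"
            + Uri.EscapeDataString(format) + "/"
            + Uri.EscapeDataString(email) + "/"; // keep the trailing slash
    }

    public static void Main()
    {
        // http://localhost:12345 is a placeholder for your own site URL.
        Console.WriteLine(Build("http://localhost:12345", "clothing", "pdf",
            "myemail@mycompany.com"));
        // http://localhost:12345/api/report/clothing/pdf/myemail%40mycompany.com/
    }
}
```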

Using Service Bus Queues for Better Communication

Up until now, you've used WebJobs to run potentially long-running tasks outside of the client's process and used Azure Storage queues to trigger those tasks. This is a powerful pattern, but it also has some limitations. With Storage queues, a client drops a message on a queue in a fire-and-forget fashion, and the message is then picked up by a single instance of a WebJob or by any one of an army of dozens, hundreds, or even thousands of WebJob instances. This gives you a fast user experience, lets you achieve immense scale, and lets you size and scale the WebJobs separately from the rest of the system. But what if you want to go beyond fire-and-forget and communicate between the client and the WebJob to get status updates and/or be notified when a process is complete? Could you have the WebJob put status messages on a response queue to be picked up by the caller? Storage queues don't allow you to direct a message to a particular consumer. Consumers of a Storage queue can't ask for only the messages pertaining to them, and they can't inspect the entire queue and grab certain messages from it. This type of queue is designed only to hand out messages from the top of the queue to whatever process asks for them. It's not designed for point-to-point communication, but there's another type of queue that can do this.

Service Bus queues are considerably more capable than Storage queues and the most interesting feature of Service Bus queues, for my purposes, is the Session. To use sessions, the client passes a SessionId (a string) to the WebJob as a regular parameter. The WebJob stamps all of the messages it puts on the response queue with the SessionId it was passed. The client can then pick up all response messages stamped with that SessionId.

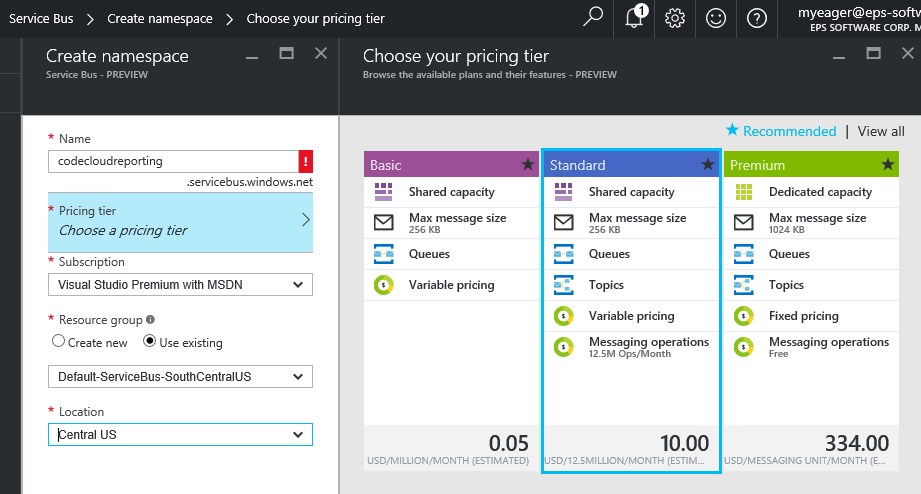

Start by creating a new Service Bus Namespace in the Azure portal. I named mine codecloudreporting. Choose the Standard pricing plan that costs about ten dollars per 12.5 million messages per month. The Basic tier doesn't support Sessions. Choose the location nearest to you for best performance, as shown in Figure 8. As I'm writing this article, access to Service Bus within the new portal has just gone into Preview. I've experienced some issues and limitations that I hope will be worked out by the time you read this. If not, you may have to use the old portal for some things, as I did. As always, when viewing pricing tiers in the portal, you may have to switch from Recommended to View All in order to see all of the pricing tier options available to you.

You may have to refresh the screen to see the new Service Bus Namespace. Once it appears, open the Settings, click on Shared access policies, and then open the RootManageSharedAccessKey and copy the Connection String Primary Key to your clipboard. Open the app.config file for the WebJobs project and add a new connection string with name="AzureWebJobsServiceBus" and connectionString="<paste your connection string here>", as shown in Figure 9. Don't remove the existing connection strings, as the SDK uses these for logging, even if your code is using Service Bus. I've noticed that some of the newer NuGet packages add an example key to the appSettings section instead of the connectionStrings section. Either location should work as long as your code reads from the matching section of the .config file, but the sample code expects it in the connectionStrings section. Copy the same connection string into the web.config file of the MVC project so that it's available to the WebJob when you deploy to Azure.

Right-click on the solution and add the NuGet package Microsoft.Azure.WebJobs.ServiceBus to both the WebJobs and MVC projects. This package extends the plumbing that you used earlier to automatically attach to an Azure Storage queue by applying the QueueTrigger attribute to a parameter in the WebJob. It adds some additional attributes that work with Azure Service Bus in a similar way. It also adds the classes that you need to manage Service Bus queues.

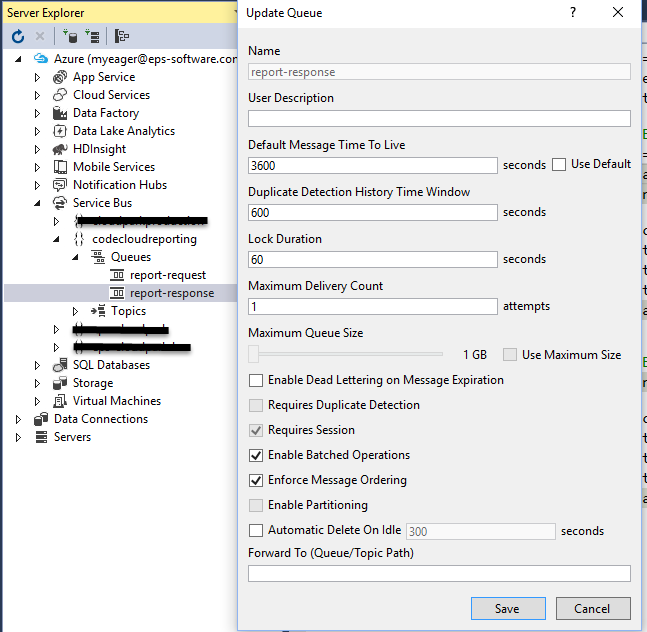

Edit the configureQueues method in Program.cs in the WebJobs project to create the request and response Service Bus queues if they don't already exist, as shown in Listing 8.

Listing 8: Create the request and response Service Bus queues

    private static void configureQueues()
    {
        //Azure Storage queue
        var storageConn = ConfigurationManager.ConnectionStrings[
            "AzureWebJobsStorage"].ConnectionString;
        var storageAccount = CloudStorageAccount.Parse(storageConn);
        var queueClient = storageAccount.CreateCloudQueueClient();
        var queue = queueClient.GetQueueReference("report-request");
        queue.CreateIfNotExists();

        //Azure Service Bus Request Queue
        var servBusConn = ConfigurationManager.ConnectionStrings[
            "AzureWebJobsServiceBus"].ConnectionString;
        var namespaceManager = NamespaceManager.
            CreateFromConnectionString(servBusConn);
        if (!namespaceManager.QueueExists("report-request"))
        {
            var queueDescription = new QueueDescription("report-request");
            queueDescription.RequiresSession = false;
            queueDescription.MaxDeliveryCount = 1;
            queueDescription.DefaultMessageTimeToLive = new TimeSpan(1, 0, 0);
            namespaceManager.CreateQueue(queueDescription);
        }

        //Azure Service Bus Response Queue
        if (!namespaceManager.QueueExists("report-response"))
        {
            var queueDescription = new QueueDescription("report-response");
            queueDescription.RequiresSession = true;
            queueDescription.MaxDeliveryCount = 1;
            queueDescription.DefaultMessageTimeToLive = new TimeSpan(1, 0, 0);
            namespaceManager.CreateQueue(queueDescription);
        }
    }

Notice that the RequiresSession property is set to false for the request queue and to true for the response queue. This allows any number of instances of the WebJob to process the initial request from the request queue, while letting the client ask only for the messages on the response queue that are stamped with the client's SessionId. Messages in both queues are killed off if they're not processed within one hour (the DefaultMessageTimeToLive value). This keeps orphaned messages from accumulating in the queue forever if there's a problem with the system. Also, MaxDeliveryCount is set to one so that any failed deliveries won't be retried.

Edit the Main method by calling JobHost with an instance of JobHostConfiguration, directing it to use Service Bus queues, as shown in Listing 9.

Listing 9: Tell the JobHost to use the Service Bus queues

    static void Main()
    {
        if (!VerifyConfiguration())
        {
            Console.ReadLine();
            return;
        }

        configureQueues();

        var config = new JobHostConfiguration();
        config.UseServiceBus();
        config.Queues.BatchSize = 1;

        JobHost host = new JobHost(config);
        host.RunAndBlock();
    }

Press F5 to run and create the queues. Open Server Explorer (not Cloud Explorer) in Visual Studio and connect to your Azure account, if you haven't already. Open the Service Bus node and find your queues. Server Explorer is a handy tool to use when developing and testing with Azure Service Bus, allowing you to test sending and receiving messages. It also allows you to Update a queue, as shown in Figure 10, giving you the most comprehensive tool for modifying queues that I've found. Oddly, Cloud Explorer has only limited support for Service Bus.

Open RunReport.cs and change the QueueTrigger attribute to a ServiceBusTrigger attribute. The name of the queue is the same. At this point, you've replicated the functionality that you had with Storage queues, allowing the WebJob to pick up requests from the request queue and process them. Next, you'll edit the code in the RunReport class to write responses on the response queue that can be retrieved by the original caller.

Add a new class to the Reports project, as shown in Listing 10. This class contains the structure of the response queue messages the WebJob sends back to the client.

Listing 10: Add a new class to the Reports project

    public class ReportStatusResponse
    {
        public bool IsProcessComplete { get; set; } = false;
        public string Message { get; set; } = string.Empty;
        public int Count { get; set; } = 0;
        public int TotalCount { get; set; } = 0;
    }

Again, you let the WebJobs SDK take care of the plumbing for you to get access to the response queue. You simply add a parameter to the ReadReportRequestQueue method that is of type ICollector<BrokeredMessage> and has the attribute [ServiceBus("report-response")]. The SDK connects to the queue and passes a parameter that you can use to write entries into the queue, as shown in Listing 11.

Listing 11: The SDK connects to the queue and passes a parameter

    public static void ReadReportRequestQueue(
        [ServiceBusTrigger("report-request")] ReportParameters request,
        [ServiceBus("report-response")] ICollector<BrokeredMessage> responseQueue,
        TextWriter log)
    {
        var sessionId = request.SessionId.ToString();

        var msg = "Read from Report Request Queue.";
        log.WriteLine(msg);
        var queueMsg = new ReportStatusResponse { Message = msg };
        responseQueue.Add(new BrokeredMessage(queueMsg) { SessionId = sessionId });

        var pdf = getReport(request.Category, request.Format, responseQueue,
            sessionId, log);
        if (pdf == null)
        {
            msg = "Failed to send report to: " + request.To;
            log.WriteLine(msg);
            queueMsg = new ReportStatusResponse { Message = msg,
                IsProcessComplete = true };
            responseQueue.Add(
                new BrokeredMessage(queueMsg) { SessionId = sessionId });
            sendEmail(request.From, "helpdesk@mycompany.com", "Emailing failed",
                "Please check the WebJob logs.", null);
            return;
        }

        // The string literal is concatenated so that it can span two lines.
        sendEmail(request.From, request.To, request.Subject,
            "You must have a PDF reader installed on your machine to " +
            "open the report.", pdf);

        msg = "Successfully sent report to: " + request.To;
        log.WriteLine(msg);
        queueMsg = new ReportStatusResponse { Message = msg,
            IsProcessComplete = true };
        responseQueue.Add(new BrokeredMessage(queueMsg) { SessionId = sessionId });
    }

Notice that you can add messages to the response queue by simply adding a new instance of BrokeredMessage to responseQueue. BrokeredMessage serializes whatever object is passed into its constructor. When putting BrokeredMessages on the response queue, you specify the SessionId passed in by the original caller so that the caller can request only the status messages on the response queue that are intended for it. For demonstration purposes, I've also added some code to the getData method to simulate a long process: it sends ten status update messages, with a Thread.Sleep call that pauses for two seconds between each message.

Now that the WebJob can respond to the request queue and post status updates on the response queue, you need to update the services to point to the Service Bus queues instead of the Storage queue. Open ReportController.cs in the MVC project and add static fields and properties for the Service Bus connection string, NamespaceManager, and MessagingFactory so that you don't have to repeat that code in every method call, as shown in Listing 12.

Listing 12: Add static fields and properties for the service bus connection string

using System.Configuration;
using System.Web.Http;
using Microsoft.ServiceBus;
using Microsoft.ServiceBus.Messaging;

public class ReportController : ApiController
{
    private static string _servicesBusConnectionString =
        ConfigurationManager.ConnectionStrings["AzureWebJobsServiceBus"].
        ConnectionString;

    // Created on first use and shared by every request.
    private static NamespaceManager _namespaceMgr = null;
    protected static NamespaceManager NamespaceMgr
    {
        get
        {
            if (_namespaceMgr == null) _namespaceMgr =
                NamespaceManager.CreateFromConnectionString(
                    _servicesBusConnectionString);
            return _namespaceMgr;
        }
    }

    // Created on first use and shared by every request.
    private static MessagingFactory _msgFactory = null;
    public static MessagingFactory MsgFactory
    {
        get
        {
            if (_msgFactory == null) _msgFactory = MessagingFactory.Create(
                NamespaceMgr.Address, NamespaceMgr.Settings.TokenProvider);
            return _msgFactory;
        }
    }
}

Add a fourth Get method to ReportController.cs, which takes a fourth parameter and returns an EmailReportResponse, as shown in Listing 13.

Listing 13: Add a fourth Get method to ReportController.cs

public EmailReportResponse Get(
    string category, string format, string email, Guid sessionId)
{
    try
    {
        var response = new EmailReportResponse();
        var parameters = new ReportParameters
        {
            Category = category,
            Format = format,
            To = email,
            From = "system@cloudreporting.com",
            Subject = "Here is your report",
            SessionId = sessionId,
        };
        var queueClient = MsgFactory.CreateQueueClient("report-request");
        queueClient.Send(new BrokeredMessage(parameters));
        response.Success = true;
        return response;
    }
    catch (Exception ex)
    {
        //Log the error here.
        return new EmailReportResponse
        {
            Success = false,
            FailureInformation = "Could not queue report.",
        };
    }
}

Notice that this method passes the sessionId as a parameter to the WebJob. Add a new WebAPI service call to the MVC project to retrieve status updates by adding a new method to ReportController.cs, then add the GetQueuedReportStatusResponse class below it, as shown in Listing 14. This code connects to the response queue, asks for any messages on the queue that have the specified SessionId, and returns all of the messages to the caller.

Listing 14: Add the GetQueuedReportStatusResponse class

public GetQueuedReportStatusResponse GetQueuedReportStatus(
    Guid sessionId)
{
    try
    {
        var response = new GetQueuedReportStatusResponse();
        // ReceiveAndDelete removes each message from the queue as it is read.
        var queueClient = MsgFactory.CreateQueueClient("report-response",
            ReceiveMode.ReceiveAndDelete);
        // TimeSpan(long) takes ticks (100 nanoseconds each), so the waits
        // below are effectively immediate.
        var messageSession = queueClient.AcceptMessageSession(
            sessionId.ToString(), new TimeSpan(100));
        var brokeredMessage = messageSession.Receive(new TimeSpan(1));
        // Drain everything currently queued for this session.
        while (brokeredMessage != null)
        {
            response.Messages.Add(brokeredMessage.
                GetBody<ReportStatusResponse>());
            brokeredMessage = messageSession.Receive(new TimeSpan(1));
        }
        messageSession.Close();
        response.Success = true;
        return response;
    }
    catch (Exception ex)
    {
        //Log the error here.
        return new GetQueuedReportStatusResponse
        {
            Success = false,
            FailureInformation = "Could not get queued report status."
        };
    }
}

public class GetQueuedReportStatusResponse
{
    public bool Success { get; set; } = false;
    public string FailureInformation { get; set; } = string.Empty;
    public List<ReportStatusResponse> Messages { get; set; } =
        new List<ReportStatusResponse>();
}
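
If the session filtering in Listing 14 is hard to picture, the following in-memory model may help. It is only a sketch in plain JavaScript and does not use the Service Bus API: the responseQueue array, addToQueue, and drainSession are hypothetical names, and the "Working" message text is made up. It shows the idea that one caller's poll removes and returns only the messages tagged with its own session ID.

```javascript
// In-memory stand-in for the "report-response" queue (not the Service Bus API).
var responseQueue = [];

// Models the WebJob posting a status message tagged with a session ID.
function addToQueue(sessionId, body) {
    responseQueue.push({ sessionId: sessionId, body: body });
}

// Models one status poll: remove and return every message queued for the
// given session, leaving other sessions' messages in place.
function drainSession(sessionId) {
    var drained = [];
    var remaining = [];
    responseQueue.forEach(function (entry) {
        if (entry.sessionId === sessionId) {
            drained.push(entry.body);
        } else {
            remaining.push(entry);
        }
    });
    responseQueue = remaining;
    return drained;
}

addToQueue("session-a", { Message: "Read from Report Request Queue." });
addToQueue("session-b", { Message: "Read from Report Request Queue." });
addToQueue("session-a", { Message: "Working", Count: 1, TotalCount: 10 });

var forA = drainSession("session-a");
console.log(forA.length);          // 2
console.log(responseQueue.length); // 1 (session-b's message is untouched)
```

Because the messages are removed as they are read, a second poll for the same session returns nothing until the WebJob posts more status updates.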

Because this call expects a GUID as a parameter, you have to update WebApiConfig.cs with a new route, as shown in Listing 15. If you fail to do this, the parameter is interpreted as a string and is sent to the original GET method of the Report controller, which returns the raw data for the report. Make sure to put this route above the default route so that it gets evaluated first.

Listing 15: Update WebApiConfig.cs with a new route

config.Routes.MapHttpRoute(
    name: "statusUpdatesApi",
    routeTemplate: "api/{controller}/{sessionId}/",
    defaults: new { },
    constraints: new
    {
        sessionId =
            @"^[{(]?[0-9A-F]{8}[-]?([0-9A-F]{4}[-]?){3}[0-9A-F]{12}[)}]?$"
    } //must be a Guid
);
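
To see which URL segments the constraint in Listing 15 accepts, you can try the same pattern in JavaScript. This is a rough check rather than a test of Web API itself: the matchesStatusRoute function and the sample values are made up for illustration, and the "i" flag reflects my understanding that Web API evaluates string route constraints without regard to case.

```javascript
// The constraint pattern from Listing 15. The "i" flag is an assumption that
// mirrors case-insensitive matching of Web API route constraints.
var guidConstraint =
    /^[{(]?[0-9A-F]{8}[-]?([0-9A-F]{4}[-]?){3}[0-9A-F]{12}[)}]?$/i;

// Hypothetical helper: would this URL segment be routed as a sessionId?
function matchesStatusRoute(segment) {
    return guidConstraint.test(segment);
}

// Sample values (made up for illustration).
console.log(matchesStatusRoute("3f2504e0-4f89-41d3-9a0c-0305e82c3301"));   // true
console.log(matchesStatusRoute("{3F2504E0-4F89-41D3-9A0C-0305E82C3301}")); // true
console.log(matchesStatusRoute("bikes"));                                  // false
```

A category name such as "bikes" fails the constraint, so that request falls through to the default route and reaches the original Get method.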

Incorporate WebJobs into the Sample Web Application

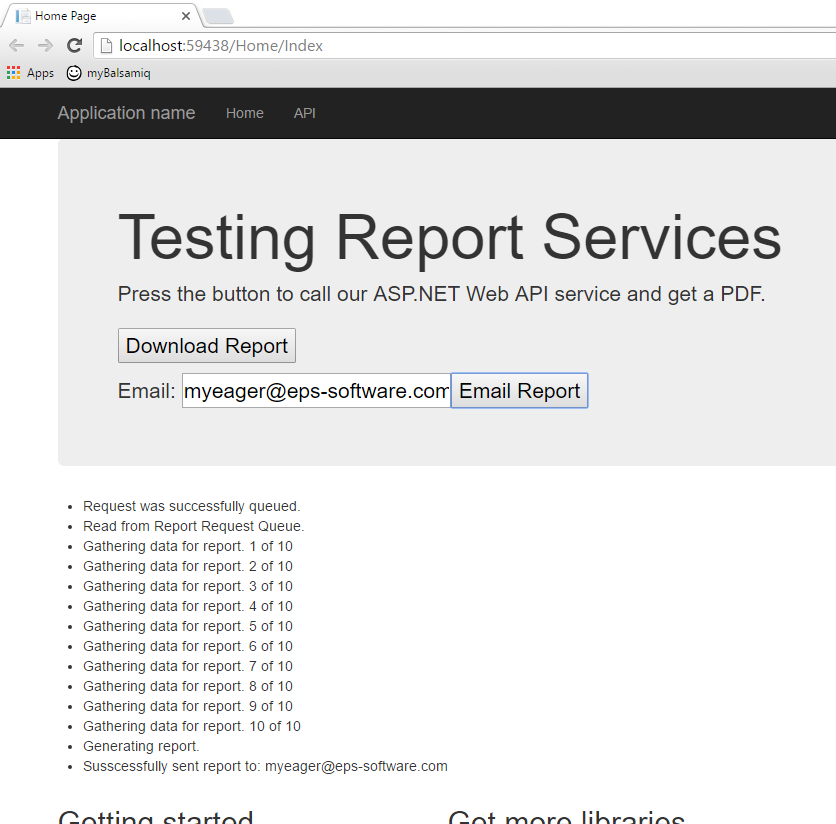

Up to this point, you haven't updated the ASP.NET client application to work with the new WebJobs capability of sending the report by email and receiving progress notifications. Open Index.cshtml in the Views > Home folder of the MVC project. Add an input box for an email address, a button to email the report and an unordered list to display status messages, as shown in Listing 16.

Listing 16: Add an input box

<div class="jumbotron">
    <h1>Testing Report Services</h1>
    <p class="lead">Press the button to call our
        ASP.NET Web API service and get a PDF.</p>
    <p><button onclick="DownloadReport()">Download Report</button></p>
    <p>Email: <input id="emailAddress" /> <button onclick="EmailReport()">
        Email Report</button></p>
</div>
<div class="row">
    <ul id="statusMessages"></ul>
</div>

Inside the script tag, below the DownloadReport function, add the two functions shown in Listing 17.

Listing 17: Add two functions inside the script tag

function EmailReport() {
    var email = $("#emailAddress").val();
    var sessionId = generateGUID();
    $.ajax({
        url: "/api/report/bikes/pdf/" + email + "/" + sessionId + "/",
        success: function (result) {
            if (result.Success) {
                $("#statusMessages").empty();
                $("#statusMessages").append(
                    "<li>Request was successfully queued.</li>");
                GetStatusMessages(sessionId);
            }
            else {
                alert(result.FailureInformation);
            }
        },
        error: function (xhr, status, error) {
            alert(error);
        }
    });
}

function GetStatusMessages(sessionId) {
    // Prevents overlapping calls when a response takes longer than the interval.
    var waitingOnLastResponse = false;
    var timerHandle = setInterval(function () {
        if (waitingOnLastResponse == false) {
            waitingOnLastResponse = true;
            $.ajax({
                url: "/api/report/" + sessionId + "/",
                success: function (statusResult) {
                    if (statusResult.Success) {
                        for (var i = 0; i < statusResult.Messages.length; i++) {
                            var currentMessage = statusResult.Messages[i];
                            var display = currentMessage.Message;
                            if (currentMessage.Count > 0) {
                                display = display + " " +
                                    currentMessage.Count + " of " +
                                    currentMessage.TotalCount;
                            }
                            $("#statusMessages").append("<li>" + display + "</li>");
                            if (currentMessage.IsProcessComplete) {
                                clearInterval(timerHandle);
                            }
                        }
                        waitingOnLastResponse = false;
                    }
                    else {
                        // Stop the timer on failure. Otherwise the flag stays
                        // true and the timer keeps firing without ever polling.
                        clearInterval(timerHandle);
                        alert(statusResult.FailureInformation);
                    }
                },
                error: function (xhr, status, error) {
                    // Same reasoning as above: stop the timer on error.
                    clearInterval(timerHandle);
                    alert(error);
                }
            });
        }
    }, 2000);
}

The EmailReport function queues up a request for the WebJob, passing along the email address entered by the user and a GUID used as a unique session ID. JavaScript and jQuery don't have native functions to create GUIDs, so I downloaded the generateGUID function from the Internet. If the call is successful, it passes the same GUID as the SessionId to the GetStatusMessages function, which polls the WebAPI service for status updates and displays the status messages in the unordered list. Once the function receives a message indicating that the process is complete, it exits the polling loop. This code doesn't contain a lot of error handling, so that it could be kept as simple as possible for this example.
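
The generateGUID helper isn't reproduced in this article. If you need a stand-in, a minimal sketch might look like the following. It is a hypothetical implementation, not the function I downloaded, and it assumes that a random (version 4 style) GUID built from Math.random is good enough for a session ID. That is fine for correlating status messages, but don't use it for anything security-sensitive.

```javascript
// Hypothetical stand-in for the generateGUID helper (not the downloaded one).
// Builds a version 4 style GUID string from Math.random.
function generateGUID() {
    return "xxxxxxxx-xxxx-4xxx-yxxx-xxxxxxxxxxxx".replace(/[xy]/g, function (c) {
        var r = Math.floor(Math.random() * 16);
        // "x" becomes any hex digit; "y" is limited to 8, 9, a, or b.
        var v = c === "x" ? r : (r & 0x3) | 0x8;
        return v.toString(16);
    });
}
```

The result has the usual 8-4-4-4-12 layout of hexadecimal digits, which is the shape the GUID route constraint in WebApiConfig.cs expects.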

Press F5 to run the solution. Enter an email address in the textbox and press the button to test the app. The results should look something like Figure 11.

Deploy to Azure

The final step is to deploy the updated website and the new WebJobs project to Azure. One option is to use the Publish wizard on the WebJobs project to deploy the WebJobs independently from the website. In production, this is often the best choice, as it not only allows you to deploy WebJobs independently, but also allows you to scale them independently. For the purposes of this sample, you're going to bundle the website and the WebJobs so that they're deployed together and run within the same App Service.

Right-click on the MVC project and choose Add > Existing Project as Azure WebJob. You'll have to either remove the period from the WebJob name or replace it with a dash to make the name valid. Set the run mode to Run Continuously. This mode runs the WebJob immediately upon deployment so that the WebJob can begin monitoring the queues. If you look under the Properties node in the MVC project, you'll see that all this does is add a webjobs-list.json file to the project. You can edit this file if you want to change any of your selections.

When you deploy WebJobs as part of a Web application, they become part of the Web app and only the web.config is deployed. If you didn't copy the connection strings from the app.config file in the WebJobs project to the web.config file in the MVC project, now's your chance.

If you deployed the project from the previous article to Azure, your Publish profile is already set up. Otherwise, step through the Publish wizard for the MVC app to create a new App Service in Azure and deploy to it. Make sure that the App Service you deploy to is not set to the Free or Shared pricing tier. Use at least the Basic tier. This requirement comes from a limitation in rendering reports using the Visual Studio reporting control and not from WebJobs or Service Bus queues. After a successful deployment, a browser comes up showing the website running in Azure. Type in an email address and send the email. There is a strong chance that the process will fail.

Because you didn't include any robust error handling in the WebJobs project, you should check the WebJobs log for clues. Open the Azure Portal, navigate to the App Service, and click on WebJobs under the settings section to see the deployed WebJob and its current status, which should be Running. Select the WebJob and click on Logs in the title bar. As I write this, I'm getting an erroneous error message that the AzureWebJobsDashboard connection string is missing or invalid. However, when I click on the Toggle Output button, I can see the trace logger messages indicating that, in my case, the issue is caused by Azure not being able to connect to my local email server. After changing my email server to point to the free SendGrid account I set up in Azure, the app works as expected.

Conclusion

In this article, you started with a problem: How to handle long-running service calls that timed out on users and how to handle such calls on a massive scale without having to scale up the entire app. You imagined a long-running report that could not only cause timeouts for end users, but could also bog down the Web servers if too many clients wanted to run these reports. I introduced WebJobs as a way to offload the long-running process, allowing users to trigger report generation, then go about their business without having to wait for the report to finish. Splitting out the long-running process in a WebJob also allowed you to run these tasks on the same or different hardware if you chose, and allowed you to scale both up and out, independent of the website and WebAPI service calls. To trigger the long-running reports, you used Azure Storage queues to communicate with the WebJobs. To make things easy for the end-user application developers, you wrapped all of this up behind a WebAPI call so that they wouldn't have to know anything about queues or WebJobs. They could continue developing against familiar WebAPI calls as they always had. Not content with fire-and-forget calls, you changed from using Azure Storage queues to more powerful Azure Service Bus queues, which allowed your WebJobs to send status messages about the progress of the long-running jobs so that the end-user applications could be updated as work progressed, if they chose.

Finally, you updated the sample end-user client application, an ASP.NET MVC website, to make use of the new features, and then published everything to Azure for testing, QA, and eventually for final deployment. Along the way, you discovered some useful tools like Visual Studio's Cloud Explorer and Server Explorer that make working with Azure a whole lot easier. I only scratched the surface of the capabilities of WebJobs and the Azure Service Bus, and the app isn't quite ready for production use, but you covered a lot of ground and ended up with a concrete example that can be modified and extended to address a lot of common problems facing modern application developers. In future articles, I hope to cover some of the advanced topics, like Functions (the next level of WebJobs) and Service Bus Topics (a different take on queues). Until then, have fun adding new tools to your Azure toolbox.